Large Language Models

Learning outcomes

From this module, you will be able to

Language models activity

Each of you will receive a sticky note with a word on it. Here’s what you’ll do:

- Carefully remove the sticky note to see the word. This word is for your eyes only, so no peeking, neighbours!

- Think quickly: what word would logically follow the word on the sticky note? Write this next word on a new sticky note.

- You have about 20 seconds for this step, so trust your instincts!

- Pass your predicted word to the person next to you. Do not pass the word you received from your neighbour forward. Keep the chain going!

- Stop after the last person in your row has finished.

- Finally, one person from your row will enter the collective sentence into our Google Doc.

Markov model of language

- You’ve just created a simple Markov model of language!

- In predicting the next word from a minimal context, you likely used your linguistic intuition and familiarity with common two-word phrases or collocations.

- You could create more coherent sentences by taking more context into account, e.g., the previous two words, four words, or 100 words.

- Claude Shannon first explored this idea, using characters rather than words, in what is known as the Shannon game. See this video by Jordan Boyd-Graber for more information.

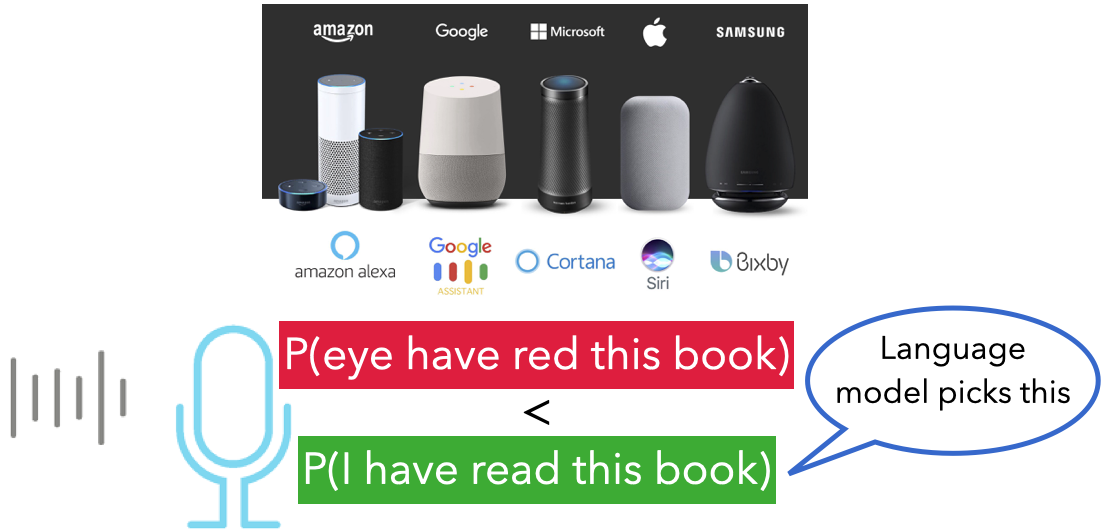

Applications of predicting next word

One of the most common applications for predicting the next word is the ‘smart compose’ feature in your emails, text messages, and search engines.

Language model

A language model computes a probability distribution over sequences (of words or characters). Intuitively, this probability tells us how “good” or plausible a sequence of words is.
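
One standard way to write this down is the chain rule of probability. The notation below ($w_1, \dots, w_n$ for the words of a sequence, $k$ for the context length) is introduced here for illustration and is not from the slides:

$$
P(w_1, \dots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \dots, w_{i-1})
$$

Each factor is a next-word prediction given everything that came before. A Markov model approximates each factor by keeping only the $k$ most recent words:

$$
P(w_i \mid w_1, \dots, w_{i-1}) \approx P(w_i \mid w_{i-k}, \dots, w_{i-1})
$$

In the sticky-note activity, each person saw only one previous word, which corresponds to $k = 1$.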

Check out this recent BMO ad.

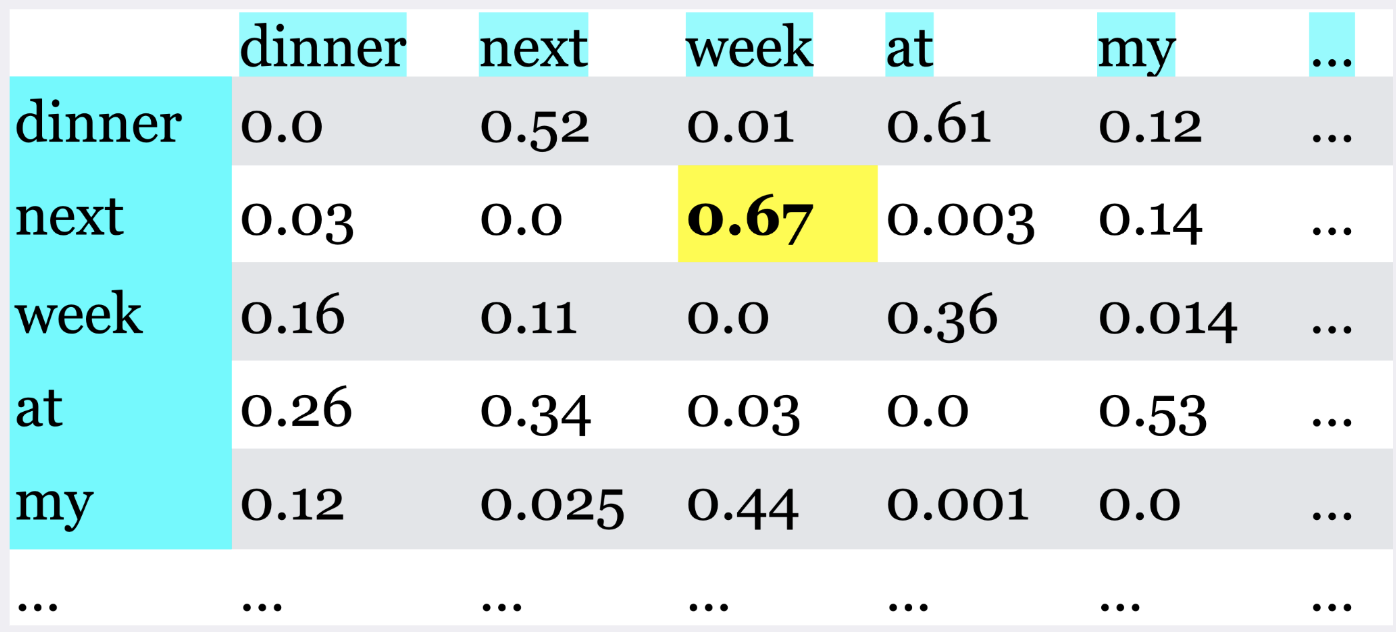

A simple model of language

- Calculate the co-occurrence frequencies and probabilities based on these frequencies

- Predict the next word based on these probabilities

- This is a Markov model of language.
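
The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production model: the toy corpus is made up, and the function names (`train_bigram_model`, `predict_next`, `generate`) are hypothetical helpers rather than part of any library.

```python
import random
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count how often each word follows each other word, then normalize."""
    tokens = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    # Convert raw co-occurrence counts into probabilities P(next | prev).
    return {
        prev: {word: c / sum(followers.values()) for word, c in followers.items()}
        for prev, followers in counts.items()
    }

def predict_next(model, word):
    """Return the most probable next word, or None if the word was never seen."""
    followers = model.get(word)
    if not followers:
        return None
    return max(followers, key=followers.get)

def generate(model, start, length=5, seed=0):
    """Sample a short chain of words, one step at a time, like the activity."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length):
        followers = model.get(words[-1])
        if not followers:
            break
        choices, probs = zip(*followers.items())
        words.append(rng.choices(choices, weights=probs)[0])
    return words

# Toy corpus, made up for illustration.
corpus = "i like machine learning . i like pizza . i love machine learning ."
model = train_bigram_model(corpus)

print(model["i"])                      # probabilities of words that follow "i"
print(predict_next(model, "machine"))  # most likely word after "machine"
print(generate(model, "i"))
```

With a corpus this small the probabilities are crude, but the mechanics are the same as in a larger Markov model: count, normalize, predict.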

Long-distance dependencies

What are some reasonable predictions for the next word in the sequence?

I am studying law at the University of British Columbia Point Grey campus in Vancouver because I want to work as a ___

A Markov model with a short context window is unable to capture such long-distance dependencies in language.
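
To make the limitation concrete, here is a small sketch of what a Markov model actually gets to look at when filling in the blank. The helper `markov_context` is a hypothetical name used only for this illustration, and whitespace splitting stands in for real tokenization.

```python
def markov_context(tokens, order):
    """Return the only words an order-`order` Markov model can condition on."""
    return tokens[-order:]

sentence = ("I am studying law at the University of British Columbia Point Grey "
            "campus in Vancouver because I want to work as a")
tokens = sentence.split()

for order in (1, 2, 5):
    context = markov_context(tokens, order)
    print(order, context, "sees 'law':", "law" in context)

# How many words back the model would need to look to reach "law".
distance = len(tokens) - tokens.index("law")
print("context length needed:", distance)
```

The informative word "law" sits far outside the window for small orders, and simply raising the order makes the co-occurrence counts very sparse, since long exact contexts are rarely repeated in any corpus.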

Transformer models

Enter attention and transformer models! Transformer models are at the core of state-of-the-art Generative AI models (e.g., BERT, GPT-3, GPT-4, Gemini, DALL-E, Llama, GitHub Copilot).

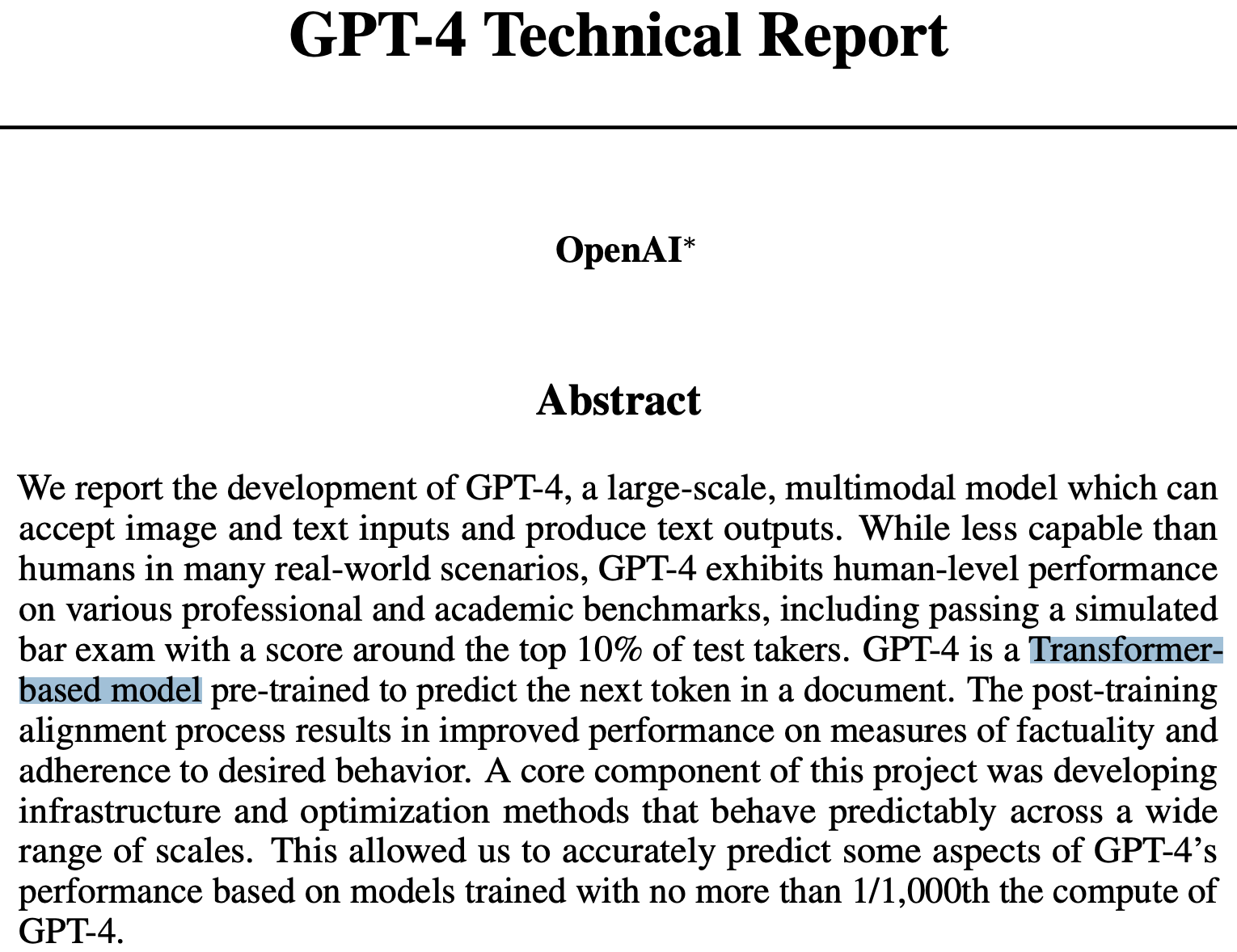

Transformer models

Source: GPT-4 Technical Report

Self-attention

- An important innovation which makes these models work so well is self-attention.

- Count how many times the players wearing white pass the basketball.

Self-attention

When we process information, we often selectively focus on specific parts of the input, giving more attention to relevant information and less attention to irrelevant information. This is the core idea of attention.

Consider the examples below:

Example 1: She left a brief note on the kitchen table, reminding him to pick up groceries.

Example 2: The diplomat’s speech struck a positive note in the peace negotiations.

Example 3: She plucked the guitar strings, ending with a melancholic note.

The word note in these examples carries quite distinct meanings, each tied to a different context. To capture varying word meanings across different contexts, we need a mechanism that considers the wider context to compute each word’s contextual representation.

- Self-attention is just that mechanism!
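
A rough sketch of the idea, under simplifying assumptions: the code below implements scaled dot-product self-attention but skips the learned query, key, and value projections that real transformers use, and the 2-dimensional embeddings are invented for illustration. It uses only the standard library so each step is visible.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections.

    Simplification: real transformers first map each vector to separate
    query, key, and value vectors using learned weight matrices.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this word against every word in the sequence, itself included.
        scores = [dot(query, key) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        # The contextual representation is a weighted average of all word vectors.
        output = [sum(w * vec[i] for w, vec in zip(weights, vectors)) for i in range(d)]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings, invented for illustration.
words = ["guitar", "melancholic", "note"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

contextual, weights = self_attention(embeddings)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each row of `weights` says how much one word attends to every word in the sequence. The output vector for "note" therefore mixes in information from "guitar" and "melancholic", which is how the same word can end up with different representations in different sentences.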

Using LLMs in your applications

- There are several Python libraries available which allow us to use pre-trained LLMs in our applications.

Types of LLMs

If you want to use pre-trained LLMs, it’s useful to know that there are three main types of LLMs.

| Feature | Decoder-only (e.g., GPT-3) | Encoder-only (e.g., BERT, RoBERTa) | Encoder-decoder (e.g., T5, BART) |
|---|---|---|---|
| Output computation is based on | Information earlier in the context | Entire context (bidirectional) | Encoded input context |
| Text generation | Can naturally generate text completion | Cannot directly generate text | Can generate outputs naturally |
| Example | DSI ML workshop audience is ___ | DSI ML workshop audience is the best! → positive | Input: Translate to Mandarin: Long but productive day! Output: 漫长而富有成效的一天! |
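
The "Output computation is based on" row is commonly realized as an attention mask: decoder-only models let each token look only at itself and earlier tokens, while encoder-only models let every token look at the whole input. The sketch below illustrates that difference; `attention_mask` is a hypothetical helper written for this example, not a library function.

```python
def attention_mask(n_tokens, causal):
    """mask[i][j] is True when token i is allowed to look at token j."""
    return [
        [(j <= i) if causal else True for j in range(n_tokens)]
        for i in range(n_tokens)
    ]

tokens = ["DSI", "ML", "workshop", "audience"]

decoder_mask = attention_mask(len(tokens), causal=True)   # decoder-only style
encoder_mask = attention_mask(len(tokens), causal=False)  # encoder-only style

for name, mask in [("decoder-only", decoder_mask), ("encoder-only", encoder_mask)]:
    print(name)
    for token, row in zip(tokens, mask):
        visible = [t for t, allowed in zip(tokens, row) if allowed]
        print(f"  {token!r} can see {visible}")
```

Because a decoder-only model never looks ahead, it can be trained to predict the next token and then generate text one token at a time. A bidirectional encoder sees the whole sentence at once, which suits classification but not left-to-right generation.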

Pipelines before LLMs

- Text preprocessing: Tokenization, stopword removal, stemming/lemmatization.

- Feature extraction: Bag of Words or word embeddings.

- Training: Supervised learning on a labeled dataset (e.g., with positive, negative, and neutral sentiment categories for sentiment analysis).

- Evaluation: Performance typically measured using accuracy, F1-score, etc.

- Main challenges:

  - Extensive feature engineering required for good performance.

  - Difficulty in capturing the nuances and context of sentiment, especially in complex sentences.
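
For comparison with the LLM-based approach, here is a compact sketch of such a pipeline using only the standard library: preprocessing, bag-of-words features, a Naive Bayes classifier, and accuracy as the evaluation metric. The stopword list and the labeled examples are made up for illustration, stemming is omitted for brevity, and in practice a library such as scikit-learn would usually handle these steps.

```python
import math
from collections import Counter

STOPWORDS = {"the", "a", "is", "was", "this", "and", "i"}  # tiny illustrative list

def preprocess(text):
    """Tokenize, lowercase, strip punctuation, and drop stopwords."""
    tokens = (t.strip(".,!?").lower() for t in text.split())
    return [t for t in tokens if t and t not in STOPWORDS]

def train_naive_bayes(examples):
    """Collect bag-of-words counts per label from (text, label) pairs."""
    word_counts, label_counts, vocab = {}, Counter(), set()
    for text, label in examples:
        label_counts[label] += 1
        bag = word_counts.setdefault(label, Counter())
        for token in preprocess(text):
            bag[token] += 1
            vocab.add(token)
    return word_counts, label_counts, vocab

def predict(model, text):
    """Pick the label with the highest log-probability (add-one smoothing)."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, bag in word_counts.items():
        score = math.log(label_counts[label] / total_docs)
        denom = sum(bag.values()) + len(vocab)
        for token in preprocess(text):
            score += math.log((bag[token] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Made-up labeled data, for illustration only.
train = [
    ("I loved this workshop", "positive"),
    ("The demo was great and fun", "positive"),
    ("This talk was boring", "negative"),
    ("I hated the terrible slides", "negative"),
]
test = [("a great workshop", "positive"), ("boring and terrible", "negative")]

model = train_naive_bayes(train)
predictions = [predict(model, text) for text, _ in test]
accuracy = sum(p == y for p, (_, y) in zip(predictions, test)) / len(test)
print(predictions, accuracy)
```

Note that the model only sees individual word counts. A sentence like "not great at all" would be scored from "not", "great", "at", and "all" independently, which is exactly the difficulty with nuance and context listed above.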

Pipelines after LLMs

```python
from transformers import pipeline

# Sentiment analysis pipeline
analyzer = pipeline("sentiment-analysis", model="distilbert-base-uncased-finetuned-sst-2-english")

analyzer([
    "I asked my model to predict my future, and it said '404: Life not found.'",
    '''Machine learning is just like cooking—sometimes you follow the recipe,
    and other times you just hope for the best!.''',
])
```

```
[{'label': 'NEGATIVE', 'score': 0.995707631111145},
 {'label': 'POSITIVE', 'score': 0.9994770884513855}]
```

Zero-shot learning

```
['i left with my bouquet of red and yellow tulips under my arm feeling slightly more optimistic than when i arrived',
 'i was feeling a little vain when i did this one',
 'i cant walk into a shop anywhere where i do not feel uncomfortable',
 'i felt anger when at the end of a telephone call',
 'i explain why i clung to a relationship with a boy who was in many ways immature and uncommitted despite the excitement i should have been feeling for getting accepted into the masters program at the university of virginia',
 'i like to have the same breathless feeling as a reader eager to see what will happen next',
 'i jest i feel grumpy tired and pre menstrual which i probably am but then again its only been a week and im about as fit as a walrus on vacation for the summer',
 'i don t feel particularly agitated',
 'i feel beautifully emotional knowing that these women of whom i knew just a handful were holding me and my baba on our journey',
 'i pay attention it deepens into a feeling of being invaded and helpless',
 'i just feel extremely comfortable with the group of people that i dont even need to hide myself',
 'i find myself in the odd position of feeling supportive of']
```

Zero-shot learning for emotion detection

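```python
from datasets import load_dataset
from transformers import pipeline

# Assumption: `dataset` is not defined on this slide. The texts and labels
# look like the Hugging Face "dair-ai/emotion" dataset, so it is loaded here.
dataset = load_dataset("dair-ai/emotion")

# Load the pretrained model
model_name = "facebook/bart-large-mnli"
classifier = pipeline("zero-shot-classification", model=model_name)

# Classify the first 10 test examples without any task-specific training
exs = dataset["test"]["text"][:10]
candidate_labels = ["sadness", "joy", "love", "anger", "fear", "surprise"]
outputs = classifier(exs, candidate_labels)
```

Zero-shot learning for emotion detection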

| | sequence | labels | scores |
|---|---|---|---|
| 0 | im feeling rather rotten so im not very ambiti... | [sadness, anger, surprise, fear, joy, love] | [0.7367963194847107, 0.10041724890470505, 0.09... |
| 1 | im updating my blog because i feel shitty | [sadness, surprise, anger, fear, joy, love] | [0.7429757118225098, 0.13775932788848877, 0.05... |
| 2 | i never make her separate from me because i do... | [love, sadness, surprise, fear, anger, joy] | [0.3153635561466217, 0.22490309178829193, 0.19... |
| 3 | i left with my bouquet of red and yellow tulip... | [surprise, joy, love, sadness, fear, anger] | [0.4218205511569977, 0.3336699306964874, 0.217... |
| 4 | i was feeling a little vain when i did this one | [surprise, anger, fear, love, joy, sadness] | [0.5639422535896301, 0.17000222206115723, 0.08... |
| 5 | i cant walk into a shop anywhere where i do no... | [surprise, fear, sadness, anger, joy, love] | [0.3703343868255615, 0.365593284368515, 0.1426... |
| 6 | i felt anger when at the end of a telephone call | [anger, surprise, fear, sadness, joy, love] | [0.9760519862174988, 0.012534416280686855, 0.0... |
| 7 | i explain why i clung to a relationship with a... | [surprise, joy, love, sadness, fear, anger] | [0.43820157647132874, 0.23223187029361725, 0.1... |
| 8 | i like to have the same breathless feeling as ... | [surprise, joy, love, fear, anger, sadness] | [0.7675780057907104, 0.1384691596031189, 0.031... |
| 9 | i jest i feel grumpy tired and pre menstrual w... | [surprise, sadness, anger, fear, joy, love] | [0.7340186834335327, 0.11860276013612747, 0.07... |
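
Each row in the table is one result from the zero-shot pipeline, with the labels sorted from highest to lowest score. A small sketch of how such a result might be turned into a single prediction follows. The helper names and the `min_margin` threshold are hypothetical, and the scores in `example_output` are invented round numbers, not real model output.

```python
def top_prediction(output):
    """Return the highest-scoring (label, score) pair from one pipeline result."""
    # Labels arrive sorted by score, highest first, so index 0 is the prediction.
    return output["labels"][0], output["scores"][0]

def is_clear_winner(output, min_margin=0.2):
    """Check whether the top label beats the runner-up by at least `min_margin`."""
    return output["scores"][0] - output["scores"][1] >= min_margin

# Hypothetical result in the same shape as one row of the table;
# the scores are invented for illustration.
example_output = {
    "sequence": "i felt anger when at the end of a telephone call",
    "labels": ["anger", "surprise", "fear", "sadness", "joy", "love"],
    "scores": [0.95, 0.02, 0.01, 0.01, 0.005, 0.005],
}

label, score = top_prediction(example_output)
print(label, score, is_clear_winner(example_output))
```

Looking at the margin is useful because the top label is not always a confident choice. In row 5 of the table, for example, the top two scores (about 0.370 and 0.366) are nearly tied.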

Harms of large language models

While these models are powerful and useful, be mindful of the harms they can cause. Some of the harms, as summarized here, are:

- performance disparities

- social biases and stereotypes

- toxicity

- misinformation

- security and privacy risks

- copyright and legal protections

- environmental impact

- centralization of power

Thank you!

That’s it for the modules! Now, let’s begin the lab session.

Thank you for your engagement! We would love your feedback. Here is the survey link: