| Age | Total_Bilirubin | Direct_Bilirubin | Alkaline_Phosphotase | Alamine_Aminotransferase | Aspartate_Aminotransferase | Total_Protiens | Albumin | Albumin_and_Globulin_Ratio | Target |

|---|---|---|---|---|---|---|---|---|---|

| 40 | 14.5 | 6.4 | 358 | 50 | 75 | 5.7 | 2.1 | 0.50 | Disease |

| 33 | 0.7 | 0.2 | 256 | 21 | 30 | 8.5 | 3.9 | 0.80 | Disease |

| 24 | 0.7 | 0.2 | 188 | 11 | 10 | 5.5 | 2.3 | 0.71 | No Disease |

| 60 | 0.7 | 0.2 | 171 | 31 | 26 | 7.0 | 3.5 | 1.00 | No Disease |

| 18 | 0.8 | 0.2 | 199 | 34 | 31 | 6.5 | 3.5 | 1.16 | No Disease |

Introduction to Machine Learning

🎯 Learning Outcomes

By the end of this module, you will be able to:

- Explain the difference between AI, ML, and DL.

- Describe what machine learning is and when it is appropriate to use ML-based solutions.

- Identify different types of machine learning problems, such as classification, regression, clustering, and time series forecasting.

- Recognize common data types in machine learning, including tabular, text, and image data.

- Evaluate whether a machine learning solution is suitable for your problem or whether a rule-based or human-expert solution is more appropriate.

Which cat do you think is AI-generated?

- A

- B

- Both

- None

- What clues did you use to decide?

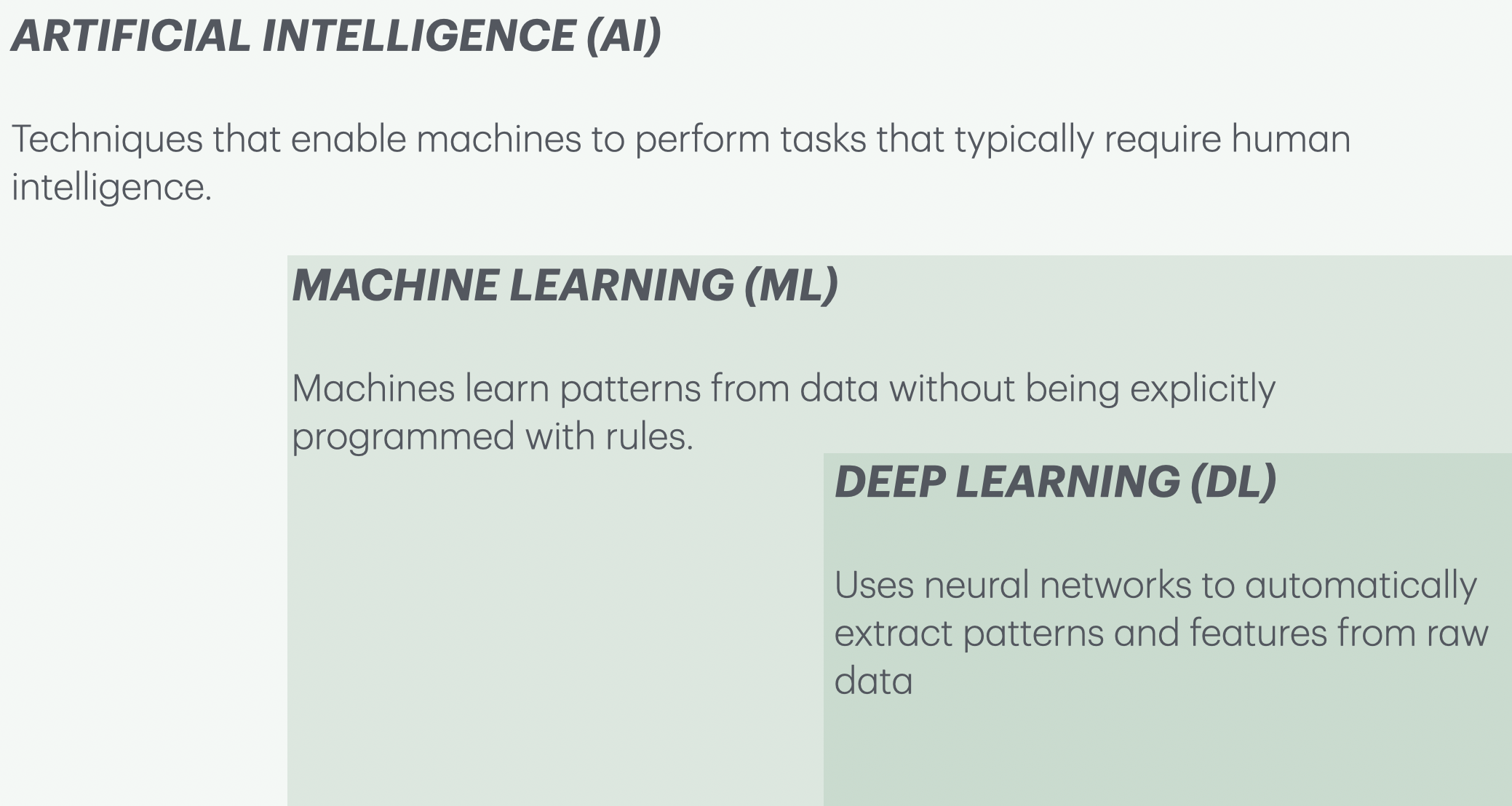

What is AI, ML, DL?

- Artificial Intelligence (AI): Making computers act smart

- Machine Learning (ML): Learning patterns from data

- Deep Learning (DL): Using neural networks to learn complex patterns

Let’s walk through an example

- Have you used search in Google Photos? You can search for “cat” and it will retrieve photos from your libraries containing cats.

- This can be done using image classification.

Image classification

- Imagine we want a system that can tell cats and foxes apart.

- How might we do this with traditional programming? With ML?

| Image ID | Whiskers Present | Ear Size | Face Shape | Fur Color | Eye Shape | Label |

|---|---|---|---|---|---|---|

| 1 | Yes | Small | Round | Mixed | Round | Cat |

| 2 | Yes | Medium | Round | Brown | Almond | Cat |

| 3 | Yes | Large | Pointed | Red | Narrow | Fox |

| 4 | Yes | Large | Pointed | Red | Narrow | Fox |

| 5 | Yes | Small | Round | Mixed | Round | Cat |

| 6 | Yes | Large | Pointed | Red | Narrow | Fox |

| 7 | Yes | Small | Round | Grey | Round | Cat |

| 8 | Yes | Small | Round | Black | Round | Cat |

| 9 | Yes | Large | Pointed | Red | Narrow | Fox |

Traditional programming: example

- You hard-code rules. If all of the following conditions are satisfied, it’s a fox.

- pointed face ✅

- red fur ✅

- narrow eyes ✅

- This works for normal cases, but what if there are exceptions?
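A minimal sketch of the hard-coded rule, assuming the feature values are spelled as in the table above. The function name `is_fox` and the white-furred example are made up for illustration.

```python
def is_fox(face_shape: str, fur_color: str, eye_shape: str) -> bool:
    """Hard-coded rule: every condition must hold for the animal to be a fox."""
    return (
        face_shape == "Pointed"
        and fur_color == "Red"
        and eye_shape == "Narrow"
    )


# Rows 3 and 1 from the table above.
print(is_fox("Pointed", "Red", "Narrow"))  # True  (labelled Fox)
print(is_fox("Round", "Mixed", "Round"))   # False (labelled Cat)

# A hypothetical exception: a fox with white fur fails the rule.
print(is_fox("Pointed", "White", "Narrow"))  # False, although it is a fox
```

Every exception needs another hand-written rule, which is where this approach starts to strain.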

ML approach: example

- We don’t tell the model the exact rule. Instead, we give it many labeled images, and it learns probabilistic patterns across multiple features, not rigid rules.

- If fur is red → 90% chance of Fox.
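A minimal sketch of learning from labeled examples, using only the fur colour column of the table above. The helper names `fit` and `predict_proba` are made up for illustration, and real models combine many features at once. On this nine-row table every red animal is a fox, so the estimate comes out at 100% rather than the illustrative 90%.

```python
from collections import Counter, defaultdict

# (fur colour, label) pairs copied from the nine-row table above.
examples = [
    ("Mixed", "Cat"), ("Brown", "Cat"), ("Red", "Fox"),
    ("Red", "Fox"), ("Mixed", "Cat"), ("Red", "Fox"),
    ("Grey", "Cat"), ("Black", "Cat"), ("Red", "Fox"),
]


def fit(pairs):
    """Count how often each label occurs for each feature value."""
    counts = defaultdict(Counter)
    for value, label in pairs:
        counts[value][label] += 1
    return counts


def predict_proba(counts, value):
    """Turn the counts for one feature value into label probabilities."""
    seen = counts.get(value)
    if not seen:
        return {}  # value never seen in training: no estimate
    total = sum(seen.values())
    return {label: n / total for label, n in seen.items()}


model = fit(examples)
print(predict_proba(model, "Red"))    # {'Fox': 1.0}
print(predict_proba(model, "Mixed"))  # {'Cat': 1.0}
```

Nobody wrote the rule "red means fox"; it fell out of the counts, and it would shift if new labeled examples disagreed.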

DL approach: example

- A neural network automatically learns which features to look at (edges → textures → objects).

- No need to even specify face shape or fur colour. It learns relevant features on its own.

What is ML?

- Learn patterns from examples (data)

- Make predictions, identify patterns, generate content

- ML systems can improve over time with more data

- No single model fits all problems

When to use ML?

- When the problem can’t be solved with a fixed set of rules

- When you have lots of data and complex relationships

- When human decision-making is too slow or inconsistent

| Approach | Best for |

|---|---|

| Traditional Programming | Rules are known, data is clean/predictable |

| Machine Learning | Rules are complex/unknown, data is noisy |

When to use Machine Learning (ML) solutions?

Example: Supervised classification

- We want to predict liver disease from tabular features:

Model training

from lightgbm.sklearn import LGBMClassifier

model = LGBMClassifier(random_state=123, verbose=-1)

model.fit(X_train, y_train)

New examples

- Given the features of the new patients below, we’ll use this model to predict whether or not they have liver disease.

| Age | Total_Bilirubin | Direct_Bilirubin | Alkaline_Phosphotase | Alamine_Aminotransferase | Aspartate_Aminotransferase | Total_Protiens | Albumin | Albumin_and_Globulin_Ratio |

|---|---|---|---|---|---|---|---|---|

| 19 | 1.4 | 0.8 | 178 | 13 | 26 | 8.0 | 4.6 | 1.30 |

| 12 | 1.0 | 0.2 | 719 | 157 | 108 | 7.2 | 3.7 | 1.00 |

| 60 | 5.7 | 2.8 | 214 | 412 | 850 | 7.3 | 3.2 | 0.78 |

| 42 | 0.5 | 0.1 | 162 | 155 | 108 | 8.1 | 4.0 | 0.90 |

Model predictions on new examples

- Let’s examine predictions

pred_df = pd.DataFrame({"Predicted_target": model.predict(X_test).tolist()})

df_concat = pd.concat([pred_df, X_test.reset_index(drop=True)], axis=1)

HTML(df_concat.to_html(index=False))

| Predicted_target | Age | Total_Bilirubin | Direct_Bilirubin | Alkaline_Phosphotase | Alamine_Aminotransferase | Aspartate_Aminotransferase | Total_Protiens | Albumin | Albumin_and_Globulin_Ratio |

|---|---|---|---|---|---|---|---|---|---|

| No Disease | 19 | 1.4 | 0.8 | 178 | 13 | 26 | 8.0 | 4.6 | 1.30 |

| Disease | 12 | 1.0 | 0.2 | 719 | 157 | 108 | 7.2 | 3.7 | 1.00 |

| Disease | 60 | 5.7 | 2.8 | 214 | 412 | 850 | 7.3 | 3.2 | 0.78 |

| Disease | 42 | 0.5 | 0.1 | 162 | 155 | 108 | 8.1 | 4.0 | 0.90 |

Example: Supervised regression

Suppose we want to predict housing prices given a number of attributes associated with houses. The target here is continuous and not discrete.

| target | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | condition | grade | sqft_above | sqft_basement | yr_built | yr_renovated | zipcode | lat | long | sqft_living15 | sqft_lot15 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 509000.0 | 2 | 1.50 | 1930 | 3521 | 2.0 | 0 | 0 | 3 | 8 | 1930 | 0 | 1989 | 0 | 98007 | 47.6092 | -122.146 | 1840 | 3576 |

| 675000.0 | 5 | 2.75 | 2570 | 12906 | 2.0 | 0 | 0 | 3 | 8 | 2570 | 0 | 1987 | 0 | 98075 | 47.5814 | -122.050 | 2580 | 12927 |

| 420000.0 | 3 | 1.00 | 1150 | 5120 | 1.0 | 0 | 0 | 4 | 6 | 800 | 350 | 1946 | 0 | 98116 | 47.5588 | -122.392 | 1220 | 5120 |

| 680000.0 | 8 | 2.75 | 2530 | 4800 | 2.0 | 0 | 0 | 4 | 7 | 1390 | 1140 | 1901 | 0 | 98112 | 47.6241 | -122.305 | 1540 | 4800 |

| 357823.0 | 3 | 1.50 | 1240 | 9196 | 1.0 | 0 | 0 | 3 | 8 | 1240 | 0 | 1968 | 0 | 98072 | 47.7562 | -122.094 | 1690 | 10800 |

Building a regression model

Predicting prices of unseen houses

pred_df = pd.DataFrame(

{"Predicted_target": model.predict(X_test[0:4]).tolist()}

)

df_concat = pd.concat([pred_df, X_test[0:4].reset_index(drop=True)], axis=1)

HTML(df_concat.to_html(index=False))

| Predicted_target | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | condition | grade | sqft_above | sqft_basement | yr_built | yr_renovated | zipcode | lat | long | sqft_living15 | sqft_lot15 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 345831.740542 | 4 | 2.25 | 2130 | 8078 | 1.0 | 0 | 0 | 4 | 7 | 1380 | 750 | 1977 | 0 | 98055 | 47.4482 | -122.209 | 2300 | 8112 |

| 601042.018745 | 3 | 2.50 | 2210 | 7620 | 2.0 | 0 | 0 | 3 | 8 | 2210 | 0 | 1994 | 0 | 98052 | 47.6938 | -122.130 | 1920 | 7440 |

| 311310.186024 | 4 | 1.50 | 1800 | 9576 | 1.0 | 0 | 0 | 4 | 7 | 1800 | 0 | 1977 | 0 | 98045 | 47.4664 | -121.747 | 1370 | 9576 |

| 597555.592401 | 3 | 2.50 | 1580 | 1321 | 2.0 | 0 | 2 | 3 | 8 | 1080 | 500 | 2014 | 0 | 98107 | 47.6688 | -122.402 | 1530 | 1357 |

We are predicting continuous values here, as opposed to the discrete values in the disease vs. no disease example.
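To make the contrast concrete, here is a minimal sketch of regression with a single feature. It fits a straight line by ordinary least squares to the `sqft_living` and `target` columns of the five training rows shown earlier. This is a deliberately simple stand-in, not the model used for the predictions above, and the helper names `fit_line` and `predict_price` are made up for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y ≈ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# sqft_living and target (price) from the five training rows shown earlier.
sqft_living = [1930, 2570, 1150, 2530, 1240]
price = [509000.0, 675000.0, 420000.0, 680000.0, 357823.0]

slope, intercept = fit_line(sqft_living, price)


def predict_price(sqft: float) -> float:
    """Return a continuous price estimate for a given living area."""
    return slope * sqft + intercept


# The output is a real number, not a class label.
print(round(predict_price(2000), 2))
```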

Text data

Example: Text classification

- Suppose you are given some data with labeled spam and non-spam messages and you want to predict whether a new message is spam or not spam.

| target | sms |

|---|---|

| spam | LookAtMe!: Thanks for your purchase of a video clip from LookAtMe!, you've been charged 35p. Think you can do better? Why not send a video in a MMSto 32323. |

| ham | Aight, I'll hit you up when I get some cash |

| ham | Don no da:)whats you plan? |

| ham | Going to take your babe out ? |

| ham | No need lar. Jus testing e phone card. Dunno network not gd i thk. Me waiting 4 my sis 2 finish bathing so i can bathe. Dun disturb u liao u cleaning ur room. |

Let’s train a model

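from sklearn.feature_extraction.text import CountVectorizer

from sklearn.linear_model import LogisticRegression

from sklearn.pipeline import make_pipeline

X_train, y_train = train_df["sms"], train_df["target"]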

X_test, y_test = test_df["sms"], test_df["target"]

clf = make_pipeline(CountVectorizer(max_features=5000), LogisticRegression(max_iter=5000))

clf.fit(X_train, y_train)  # Training the model

Unseen messages

- Now use the trained model to predict targets of unseen messages:

| | sms |

|---|---|

| 3245 | Funny fact Nobody teaches volcanoes 2 erupt, tsunamis 2 arise, hurricanes 2 sway aroundn no 1 teaches hw 2 choose a wife Natural disasters just happens |

| 944 | I sent my scores to sophas and i had to do secondary application for a few schools. I think if you are thinking of applying, do a research on cost also. Contact joke ogunrinde, her school is one m... |

| 1044 | We know someone who you know that fancies you. Call 09058097218 to find out who. POBox 6, LS15HB 150p |

| 2484 | Only if you promise your getting out as SOON as you can. And you'll text me in the morning to let me know you made it in ok. |

Predicting on unseen data

The model is accurately predicting labels for the unseen text messages above!

| | sms | spam_predictions |

|---|---|---|

| 3245 | Funny fact Nobody teaches volcanoes 2 erupt, tsunamis 2 arise, hurricanes 2 sway aroundn no 1 teaches hw 2 choose a wife Natural disasters just happens | ham |

| 944 | I sent my scores to sophas and i had to do secondary application for a few schools. I think if you are thinking of applying, do a research on cost also. Contact joke ogunrinde, her school is one me the less expensive ones | ham |

| 1044 | We know someone who you know that fancies you. Call 09058097218 to find out who. POBox 6, LS15HB 150p | spam |

| 2484 | Only if you promise your getting out as SOON as you can. And you'll text me in the morning to let me know you made it in ok. | ham |
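The pipeline above cannot feed raw text to logistic regression; `CountVectorizer` first turns each message into word counts. Below is a rough plain-Python sketch of that bag-of-words step, assuming the vectorizer's default settings (lowercasing and tokens of two or more word characters). The helper names `tokenize` and `bag_of_words` are made up for illustration, and the `max_features=5000` cap is not reproduced here.

```python
import re
from collections import Counter

# Same default token pattern as scikit-learn's CountVectorizer:
# runs of two or more word characters, applied after lowercasing.
TOKEN_PATTERN = re.compile(r"(?u)\b\w\w+\b")


def tokenize(text: str) -> list[str]:
    return TOKEN_PATTERN.findall(text.lower())


def bag_of_words(messages: list[str]):
    """Return a sorted vocabulary and one count vector per message."""
    vocabulary = sorted({tok for msg in messages for tok in tokenize(msg)})
    vectors = []
    for msg in messages:
        counts = Counter(tokenize(msg))
        vectors.append([counts[word] for word in vocabulary])
    return vocabulary, vectors


# Two ham messages from the training table above.
messages = [
    "Going to take your babe out ?",
    "Aight, I'll hit you up when I get some cash",
]
vocabulary, vectors = bag_of_words(messages)
print(vocabulary)
print(vectors)
```

Each message becomes a row of numbers with one column per vocabulary word, which is the kind of tabular input the classifier can learn from.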

Example: Text classification with LLMs

from transformers import pipeline, AutoModelForTokenClassification, AutoTokenizer

# Sentiment analysis pipeline

analyzer = pipeline("sentiment-analysis", model='distilbert-base-uncased-finetuned-sst-2-english')

analyzer(["I asked my model to predict my future, and it said '404: Life not found.'",

'''Machine learning is just like cooking—sometimes you follow the recipe,

and other times you just hope for the best!.'''])

[{'label': 'NEGATIVE', 'score': 0.995707631111145},

{'label': 'POSITIVE', 'score': 0.9994770884513855}]

Zero-shot learning

- Now suppose you want to identify the emotion expressed in the text rather than just positive or negative.

['im feeling rather rotten so im not very ambitious right now',

'im updating my blog because i feel shitty',

'i never make her separate from me because i don t ever want her to feel like i m ashamed with her',

'i left with my bouquet of red and yellow tulips under my arm feeling slightly more optimistic than when i arrived',

'i was feeling a little vain when i did this one',

'i cant walk into a shop anywhere where i do not feel uncomfortable',

'i felt anger when at the end of a telephone call',

'i explain why i clung to a relationship with a boy who was in many ways immature and uncommitted despite the excitement i should have been feeling for getting accepted into the masters program at the university of virginia',

'i like to have the same breathless feeling as a reader eager to see what will happen next',

'i jest i feel grumpy tired and pre menstrual which i probably am but then again its only been a week and im about as fit as a walrus on vacation for the summer']

Zero-shot learning for emotion detection

from transformers import AutoTokenizer

from transformers import pipeline

import torch

#Load the pretrained model

model_name = "facebook/bart-large-mnli"

classifier = pipeline('zero-shot-classification', model=model_name)

exs = dataset["test"]["text"][10:20]

candidate_labels = ["sadness", "joy", "love","anger", "fear", "surprise"]

outputs = classifier(exs, candidate_labels)

Zero-shot learning for emotion detection

| | sequence | labels | scores |

|---|---|---|---|

| 0 | i don t feel particularly agitated | [surprise, anger, joy, sadness, fear, love] | [0.360086590051651, 0.3019047975540161, 0.11901281774044037, 0.11381449550390244, 0.060391612350940704, 0.04478970915079117] |

| 1 | i feel beautifully emotional knowing that these women of whom i knew just a handful were holding me and my baba on our journey | [joy, love, surprise, fear, sadness, anger] | [0.36994314193725586, 0.2887154817581177, 0.25607964396476746, 0.04292308911681175, 0.03344891220331192, 0.008889704942703247] |

| 2 | i pay attention it deepens into a feeling of being invaded and helpless | [fear, surprise, sadness, anger, joy, love] | [0.34146907925605774, 0.3088078498840332, 0.25616830587387085, 0.07989830523729324, 0.007844790816307068, 0.005811698734760284] |

| 3 | i just feel extremely comfortable with the group of people that i dont even need to hide myself | [joy, surprise, love, sadness, anger, fear] | [0.3305220901966095, 0.29472312331199646, 0.15343144536018372, 0.07691436260938644, 0.07596738636493683, 0.06844153255224228] |

| 4 | i find myself in the odd position of feeling supportive of | [surprise, joy, fear, love, sadness, anger] | [0.8287988901138306, 0.04317942634224892, 0.039773669093847275, 0.031413134187459946, 0.031412459909915924, 0.025422409176826477] |

| 5 | i was feeling as heartbroken as im sure katniss was | [sadness, surprise, fear, love, anger, joy] | [0.7667969465255737, 0.1818471997976303, 0.025871198624372482, 0.011756820604205132, 0.008171578869223595, 0.005556156858801842] |

| 6 | i feel a little mellow today | [surprise, joy, love, fear, sadness, anger] | [0.49373722076416016, 0.26321911811828613, 0.113678477704525, 0.06402145326137543, 0.050955016165971756, 0.01438874565064907] |

| 7 | i feel like my only role now would be to tear your sails with my pessimism and discontent | [sadness, anger, surprise, fear, joy, love] | [0.6992796063423157, 0.20048746466636658, 0.06185894086956978, 0.03287427872419357, 0.00364686525426805, 0.0018528560176491737] |

| 8 | i feel just bcoz a fight we get mad to each other n u wanna make a publicity n let the world knows about our fight | [anger, surprise, sadness, fear, joy, love] | [0.6029903292655945, 0.19827111065387726, 0.10198860615491867, 0.08116946369409561, 0.01011708565056324, 0.005463303066790104] |

| 9 | i feel like reds and purples are just so rich and kind of perfect | [joy, surprise, love, anger, fear, sadness] | [0.3644145727157593, 0.3051210045814514, 0.19462504982948303, 0.055566269904375076, 0.05413524806499481, 0.026137862354516983] |
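In the table above, the labels in each row are sorted by descending score, so the first label is the model's best guess. A small sketch of reading off that guess, assuming `outputs` is a list of dicts with `sequence`, `labels`, and `scores` keys as the columns suggest. The two entries are copied from rows 0 and 5; the `THRESHOLD` value and the `top_prediction` helper are hypothetical.

```python
# Two rows copied from the table above, in list-of-dicts form.
outputs = [
    {
        "sequence": "i don t feel particularly agitated",
        "labels": ["surprise", "anger", "joy", "sadness", "fear", "love"],
        "scores": [0.360086590051651, 0.3019047975540161, 0.11901281774044037,
                   0.11381449550390244, 0.060391612350940704, 0.04478970915079117],
    },
    {
        "sequence": "i was feeling as heartbroken as im sure katniss was",
        "labels": ["sadness", "surprise", "fear", "love", "anger", "joy"],
        "scores": [0.7667969465255737, 0.1818471997976303, 0.025871198624372482,
                   0.011756820604205132, 0.008171578869223595, 0.005556156858801842],
    },
]

THRESHOLD = 0.5  # hypothetical cut-off for "confident enough"


def top_prediction(output: dict) -> tuple[str, float, bool]:
    """Return the best label, its score, and whether it clears THRESHOLD."""
    label, score = output["labels"][0], output["scores"][0]
    return label, score, score >= THRESHOLD


for out in outputs:
    label, score, confident = top_prediction(out)
    print(f"{label:<10} {score:.3f} confident={confident}")
```

Looking at the score as well as the label helps flag rows like the first one, where the top label wins by a narrow margin.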

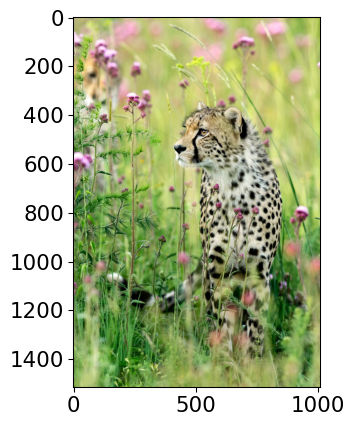

Image data

Example: Predicting labels of a given image

- Suppose you have a bunch of animal images. You do not have any labels associated with them and you want to predict labels of these images.

- We can use machine learning to predict labels for these images using a technique called transfer learning, in which a model pre-trained on a large labeled image collection is reused on the new images.

| Class | Probability score |

|---|---|

| tiger cat | 0.636 |

| tabby, tabby cat | 0.174 |

| Pembroke, Pembroke Welsh corgi | 0.081 |

| lynx, catamount | 0.011 |

| Class | Probability score |

|---|---|

| cheetah, chetah, Acinonyx jubatus | 0.994 |

| leopard, Panthera pardus | 0.005 |

| jaguar, panther, Panthera onca, Felis onca | 0.001 |

| snow leopard, ounce, Panthera uncia | 0.000 |

| Class | Probability score |

|---|---|

| macaque | 0.885 |

| patas, hussar monkey, Erythrocebus patas | 0.062 |

| proboscis monkey, Nasalis larvatus | 0.015 |

| titi, titi monkey | 0.010 |

| Class | Probability score |

|---|---|

| Walker hound, Walker foxhound | 0.582 |

| English foxhound | 0.144 |

| beagle | 0.068 |

| EntleBucher | 0.059 |

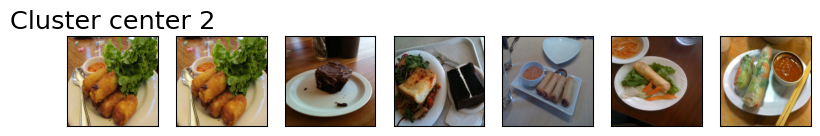

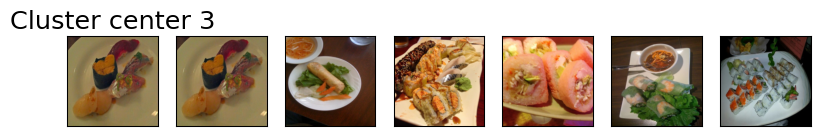

Clustering images

Finding groups in food images

K-Means on food dataset

Z_food = get_features_unsup(densenet, food_inputs)

k = 5

km = KMeans(n_clusters=k, n_init='auto', random_state=123)

km.fit(Z_food)
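For intuition about what `KMeans` does with the feature vectors, here is a from-scratch sketch of the basic assign-and-update loop (Lloyd's algorithm). It is a simplification: the first `k` points seed the centroids, whereas scikit-learn defaults to `k-means++` initialisation, and the 2-D points are hypothetical stand-ins for the image features in `Z_food`.

```python
import math


def kmeans(points, k, n_iter=10):
    """Plain Lloyd's algorithm; the first k points seed the centroids."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(n_iter):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


# Hypothetical 2-D "feature vectors" forming two obvious groups.
points = [(0.0, 0.1), (5.0, 5.1), (0.2, 0.0), (5.2, 4.9), (0.1, 0.2), (4.9, 5.0)]
labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

No labels are supplied anywhere; the groups come only from which points lie close together.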

Examining food clusters

Example: Finding most similar items

- Consider the following titles and queries.

# Corpus of existing paper titles or abstracts

corpus = [

"Mapping eelgrass beds in British Columbia using remote sensing",

"Bayesian optimization for reaction discovery and yield improvement",

"RNA-seq analysis of microbiome interactions and infection susceptibility",

"Using YOLOv8 for automatic object detection in microscopy images",

"Anomaly detection in ocean temperature sensor data with machine learning",

"Embedding-based literature recommendation using scientific abstracts"

]

# List of new queries to compare

queries = [

"Predicting yield of chemical reactions using optimization techniques",

"Tracking changes in marine vegetation through drone imagery",

"Detecting patterns of infection from gene expression data",

"Literature discovery using sentence embeddings and neural search",

"Outlier detection in sensor measurements from ocean buoys"

]

Which queries are similar to which titles?

from sentence_transformers import SentenceTransformer, util

import numpy as np

# Load the MiniLM model

model = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2')

# Encode corpus and queries

corpus_embeddings = model.encode(corpus, convert_to_tensor=True).cpu()

query_embeddings = model.encode(queries, convert_to_tensor=True).cpu()

# Set number of top matches to show

top_k = 1

Which queries are similar to which titles?
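The snippet above stops after setting `top_k`; the matching step itself is not shown. One way it might work is sketched below in plain Python: score the query against every corpus vector with cosine similarity and keep the best `top_k`. The 3-D vectors are hypothetical stand-ins for the 384-dimensional MiniLM embeddings, and `top_matches` is a made-up helper. With real embeddings, a library helper such as `util.cos_sim` could replace the hand-written function.

```python
import math


def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_matches(query_vec, corpus_vecs, top_k=1):
    """Return (corpus index, similarity) pairs, best match first."""
    scored = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(corpus_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy vectors; real ones would come from model.encode(...).
corpus_vecs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.7, 0.7, 0.0]]
query_vec = [0.9, 0.1, 0.0]

print(top_matches(query_vec, corpus_vecs, top_k=1))
```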

🔍 Query 1: Predicting yield of chemical reactions using optimization techniques

➤ Match 1: Bayesian optimization for reaction discovery and yield improvement

Cosine similarity: 0.7420

🔍 Query 2: Tracking changes in marine vegetation through drone imagery

➤ Match 1: Mapping eelgrass beds in British Columbia using remote sensing

Cosine similarity: 0.5305

🔍 Query 3: Detecting patterns of infection from gene expression data

➤ Match 1: RNA-seq analysis of microbiome interactions and infection susceptibility

Cosine similarity: 0.4292

🔍 Query 4: Literature discovery using sentence embeddings and neural search

➤ Match 1: Embedding-based literature recommendation using scientific abstracts

Cosine similarity: 0.5649

🔍 Query 5: Outlier detection in sensor measurements from ocean buoys

➤ Match 1: Anomaly detection in ocean temperature sensor data with machine learning

Cosine similarity: 0.6847

Other commonly occurring problems

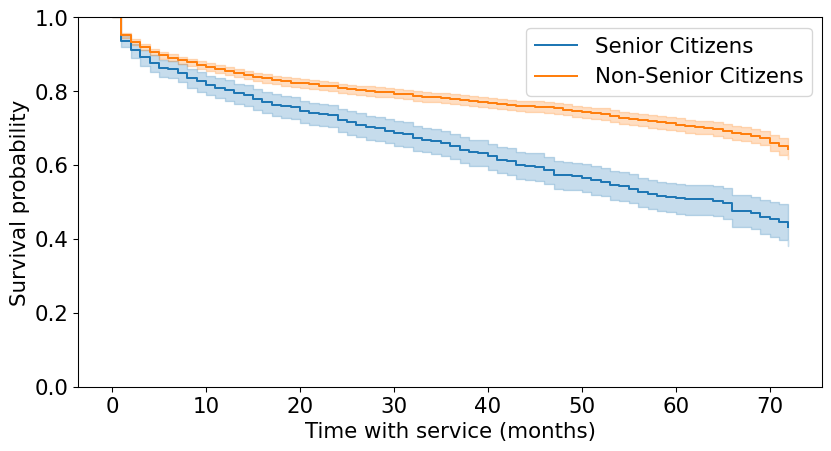

Example: Customer Churn

- Imagine that you are working for a subscription-based telecom company.

- They want to predict when a specific customer will churn so that they can come up with retention strategies for different customer segments.

- We want to model “time to churn” to understand different factors affecting customer churn.

- Is it possible to use machine learning to predict whether a specific customer will churn?

Customer Churn Dataset

- Let’s look at the Customer Churn Dataset, which was collected at a fixed point in time.

| | customerID | gender | SeniorCitizen | Partner | Dependents | tenure | PhoneService | MultipleLines | InternetService | OnlineSecurity | ... | DeviceProtection | TechSupport | StreamingTV | StreamingMovies | Contract | PaperlessBilling | PaymentMethod | MonthlyCharges | TotalCharges | Churn |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 6464 | 4726-DLWQN | Male | 1 | No | No | 50 | Yes | Yes | DSL | Yes | ... | No | No | Yes | No | Month-to-month | Yes | Bank transfer (automatic) | 70.35 | 3454.6 | 0 |

| 5707 | 4537-DKTAL | Female | 0 | No | No | 2 | Yes | No | DSL | No | ... | No | No | No | No | Month-to-month | No | Electronic check | 45.55 | 84.4 | 0 |

| 3442 | 0468-YRPXN | Male | 0 | No | No | 29 | Yes | No | Fiber optic | No | ... | Yes | Yes | Yes | Yes | Month-to-month | Yes | Credit card (automatic) | 98.80 | 2807.1 | 0 |

| 3932 | 1304-NECVQ | Female | 1 | No | No | 2 | Yes | Yes | Fiber optic | No | ... | Yes | No | No | No | Month-to-month | Yes | Electronic check | 78.55 | 149.55 | 1 |

4 rows × 21 columns

Customer Churn Dataset

- What’s different here compared to predicting disease vs. no disease?

- Here, we are actually interested in the time until the event of churn is likely to occur.

| | tenure | Churn |

|---|---|---|

| 6464 | 50 | 0 |

| 5707 | 2 | 0 |

| 3442 | 29 | 0 |

| 3932 | 2 | 1 |

| 6124 | 57 | 0 |

Probability of survival

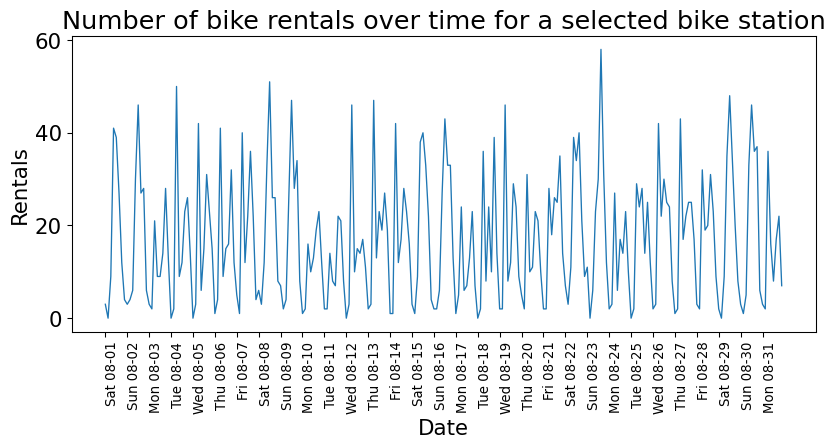

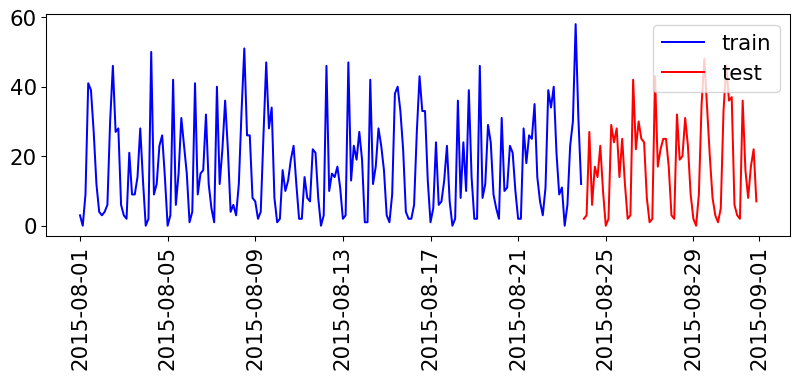
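One common way to estimate a probability-of-survival curve from `tenure` and `Churn` is the Kaplan-Meier estimator; whether the plots above were produced this way is an assumption. The sketch below applies it to the five rows shown earlier. Customers with `Churn = 0` had not churned when the data was collected, so they count as "at risk" up to their tenure but never as churn events.

```python
def kaplan_meier(durations, events):
    """Return [(time, survival probability)] at each observed event time.

    durations: time observed for each customer (the tenure column)
    events:    1 if the customer churned at that time, 0 if still subscribed
    """
    survival = 1.0
    curve = []
    for t in sorted(set(durations)):
        at_risk = sum(1 for d in durations if d >= t)
        churned = sum(1 for d, e in zip(durations, events) if d == t and e == 1)
        if churned:
            survival *= 1 - churned / at_risk
            curve.append((t, survival))
    return curve


# tenure and Churn columns from the five rows shown earlier.
tenure = [50, 2, 29, 2, 57]
churn = [0, 0, 0, 1, 0]
print(kaplan_meier(tenure, churn))  # [(2, 0.8)]
```

With only one churn event among five customers, the estimated curve drops once, to 0.8 at tenure 2, and then stays flat.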

Example: Time series

starttime

2015-08-01 00:00:00 3

2015-08-01 03:00:00 0

2015-08-01 06:00:00 9

2015-08-01 09:00:00 41

2015-08-01 12:00:00 39

Freq: 3h, Name: one, dtype: int64

Train and test data

Predictions

Train-set R^2: 0.89

Test-set R^2: 0.84
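A sketch of two ideas behind these numbers, assuming the scores are the usual coefficient of determination. First, time series are split chronologically rather than at random, so the test set lies after the training set. Second, R^2 compares the model's squared error with that of always predicting the mean. The helper names and the split size are made up for illustration.

```python
def chronological_split(series, n_train):
    """Split without shuffling so the test set lies after the train set."""
    return series[:n_train], series[n_train:]


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# The five values shown above, in time order.
values = [3, 0, 9, 41, 39]
train, test = chronological_split(values, n_train=3)
print(train, test)  # [3, 0, 9] [41, 39]

print(r2_score([3, 0, 9], [3, 0, 9]))  # 1.0 for a perfect fit
print(r2_score([3, 0, 9], [4, 4, 4]))  # 0.0 when always predicting the mean
```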

Interactive: Is ML appropriate?

Exercise 1.1: ML or not?

Select all that apply: Which problems are suitable for ML?

- Automatically classifying microscope images of tissue samples into cell types

- Assisting researchers in finding relevant scientific papers for a literature review

- Determining authorship disputes in multi-author publications

- Detecting anomalies in sensor data from an environmental monitoring station

- Predicting the likelihood of a chemical reaction yielding a desired compound

Summary: When is ML suitable?

| Approach | Best Used When… |

|---|---|

| Machine Learning | The dataset is large and complex, and the decision rules are unknown, fuzzy, or too complex to define explicitly |

| Rule-based System | The logic is clear and deterministic, and the rules or thresholds are known and stable |

| Human Expert | The problem involves ethics, creativity, emotion, or ambiguity that can’t be formalized easily |

Activity 1: ML in Your Domain

Think of a problem from your own research that could be solved using ML and write it down. Address the following:

- What are you trying to accomplish? What would be the input and output?

- What would success look like?

- How do humans solve this now? Are there heuristics or rules?

- What kind of data do you have or could you collect?

We’ll revisit this activity later to explore how you might frame your problem using ML approaches.