| | ml_experience | class_attendance | lab1 | lab2 | lab3 | lab4 | quiz1 | quiz2 |
|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 1 | 92 | 93 | 84 | 91 | 92 | 90 |
| 1 | 1 | 0 | 94 | 90 | 80 | 83 | 91 | 84 |
| 2 | 0 | 0 | 78 | 85 | 83 | 80 | 80 | 82 |
| 3 | 0 | 1 | 91 | 94 | 92 | 91 | 89 | 92 |
| 4 | 0 | 1 | 77 | 83 | 90 | 92 | 85 | 90 |
| 5 | 1 | 0 | 70 | 73 | 68 | 74 | 71 | 75 |
| 6 | 1 | 0 | 80 | 88 | 89 | 88 | 91 | 91 |

Introduction to Machine Learning

Which cat do you think is AI-generated?

- A

- B

- Both

- None

- What clues did you use to decide?

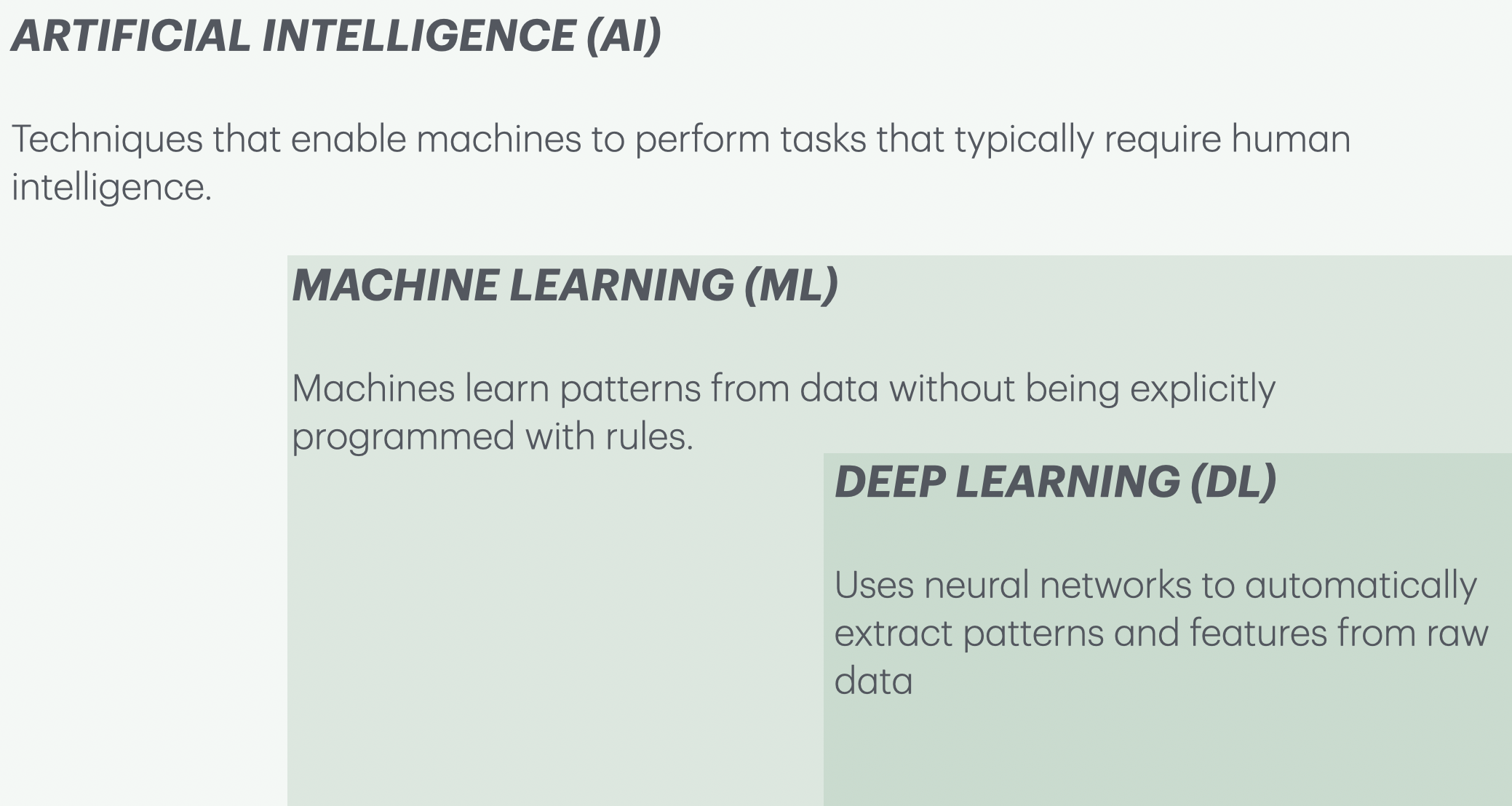

AI vs. ML vs. DL

- What is AI, and how does it relate to Machine Learning (ML) and Deep Learning (DL)?

Example: Image classification

- Have you used search in Google Photos? You can search for “cat” and it will retrieve photos from your libraries containing cats.

- This can be done using image classification.

Image classification

- Imagine you want to teach a robot to tell cats and foxes apart.

- How would you approach it?

AI approach: example

- You hard-code rules: “If the image has fur, whiskers, and pointy ears, it’s a cat.”

- This works for normal cases, but what if the cat is missing an ear? Or if the fox has short fur?
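The hard-coded rule above can be sketched in a few lines. This is only an illustration: the function name `rule_based_label` and its boolean inputs are hypothetical, and a real system would first have to detect fur, whiskers, and ears in the image.

```python
def rule_based_label(has_fur: bool, has_whiskers: bool, has_pointy_ears: bool) -> str:
    """Hard-coded rule: fur + whiskers + pointy ears means 'cat'."""
    if has_fur and has_whiskers and has_pointy_ears:
        return "cat"
    return "not a cat"


# A typical cat satisfies every condition in the rule.
typical_cat = rule_based_label(has_fur=True, has_whiskers=True, has_pointy_ears=True)

# A cat that is missing an ear fails the pointy-ears check, so the rule misses it.
cat_missing_ear = rule_based_label(has_fur=True, has_whiskers=True, has_pointy_ears=False)

print(typical_cat)      # cat
print(cat_missing_ear)  # not a cat
```

Every exception needs another hand-written rule, which is why this approach becomes hard to maintain as the edge cases pile up.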

ML approach: example

- We don’t tell the model the exact rule. Instead, we give it labeled examples, and it learns which features matter most.

- small nose ✅

- round face ✅

- whiskers ✅

- Instead of giving rules, we let the model figure out the best combination of features from data.

DL approach: example

- The robot figures out the best features by itself using a neural network.

- Instead of humans selecting features, the neural network extracts them automatically, from edges to textures to full shapes.

- The more data it sees, the better it gets.

When is ML suitable?

- ML excels when the problem involves identifying complex patterns or relationships in large datasets that are difficult for humans to discern manually.

- Rule-based systems are suitable where clear and deterministic rules can be defined. Good for structured decision making.

- Human experts are good at problems that require deep contextual understanding, ethical judgment, creative input, or emotional intelligence.

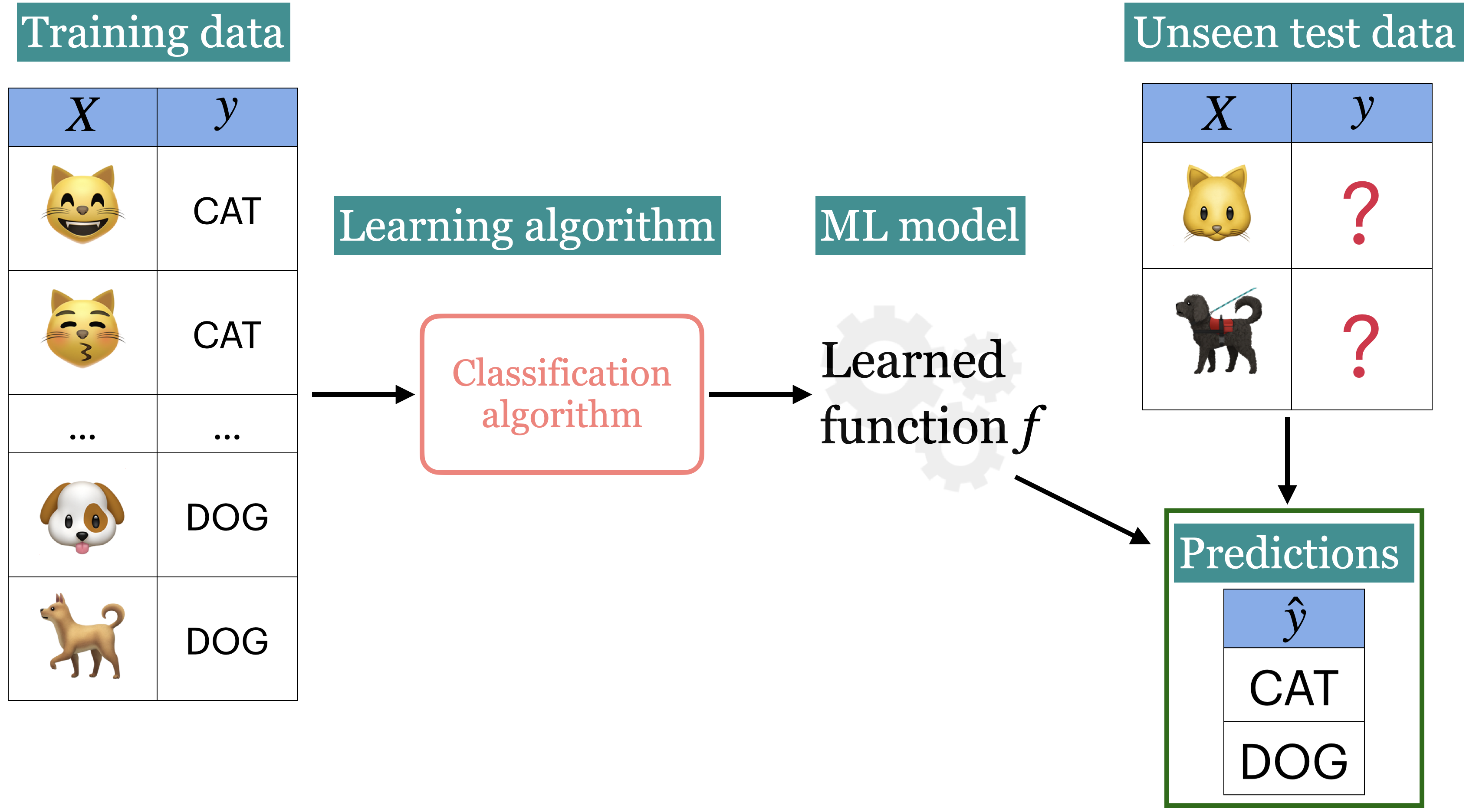

Supervised learning

- The most common type of machine learning is supervised learning.

- We aim to learn a function \(f\) that maps input features (\(X\)) to target values (\(y\)).

- Once trained, we use \(f(X)\) to make predictions on new, unseen data.

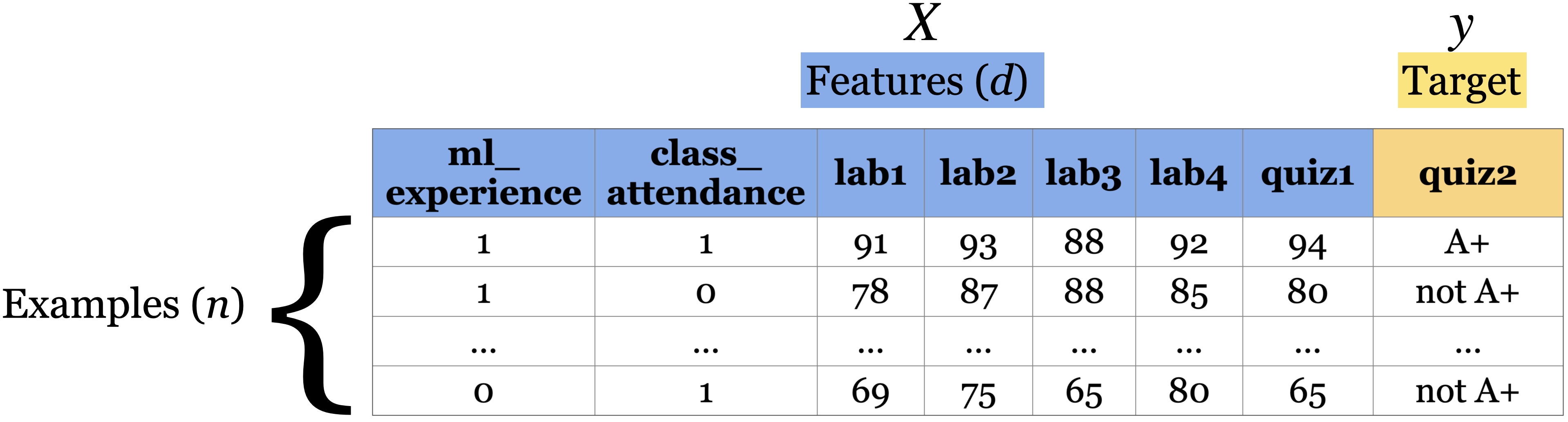

Scenario

Imagine you’re taking a course with four homework assignments and two quizzes. You’re feeling nervous about Quiz 2, so you want to predict your Quiz 2 grade based on your past performance. You collect data from your friends who took the course in the past.

Terminology

Here are a few rows from the data.

- Features: relevant characteristics of the problem, usually suggested by experts (typically denoted by \(X\)).

- Target: the variable we want to predict (typically denoted by \(y\)).

- Example: a row of feature values.
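As a minimal sketch of this terminology, the snippet below splits three rows into features and target. It assumes the grade table shown in this deck is the data being described and that `quiz2` is the target, as in the scenario; the plain-Python representation is just one convenient choice.

```python
# Three rows copied from the grade table; each dict is one example.
rows = [
    {"ml_experience": 1, "class_attendance": 1, "lab1": 92, "lab2": 93,
     "lab3": 84, "lab4": 91, "quiz1": 92, "quiz2": 90},
    {"ml_experience": 1, "class_attendance": 0, "lab1": 94, "lab2": 90,
     "lab3": 80, "lab4": 83, "quiz1": 91, "quiz2": 84},
    {"ml_experience": 0, "class_attendance": 0, "lab1": 78, "lab2": 85,
     "lab3": 83, "lab4": 80, "quiz1": 80, "quiz2": 82},
]

target_name = "quiz2"  # the variable we want to predict
feature_names = [name for name in rows[0] if name != target_name]

# X holds one list of feature values per example; y holds the matching targets.
X = [[row[name] for name in feature_names] for row in rows]
y = [row[target_name] for row in rows]

print(feature_names)
print(X[0], "->", y[0])
```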

Running example

- Can you think of other relevant features for this problem?

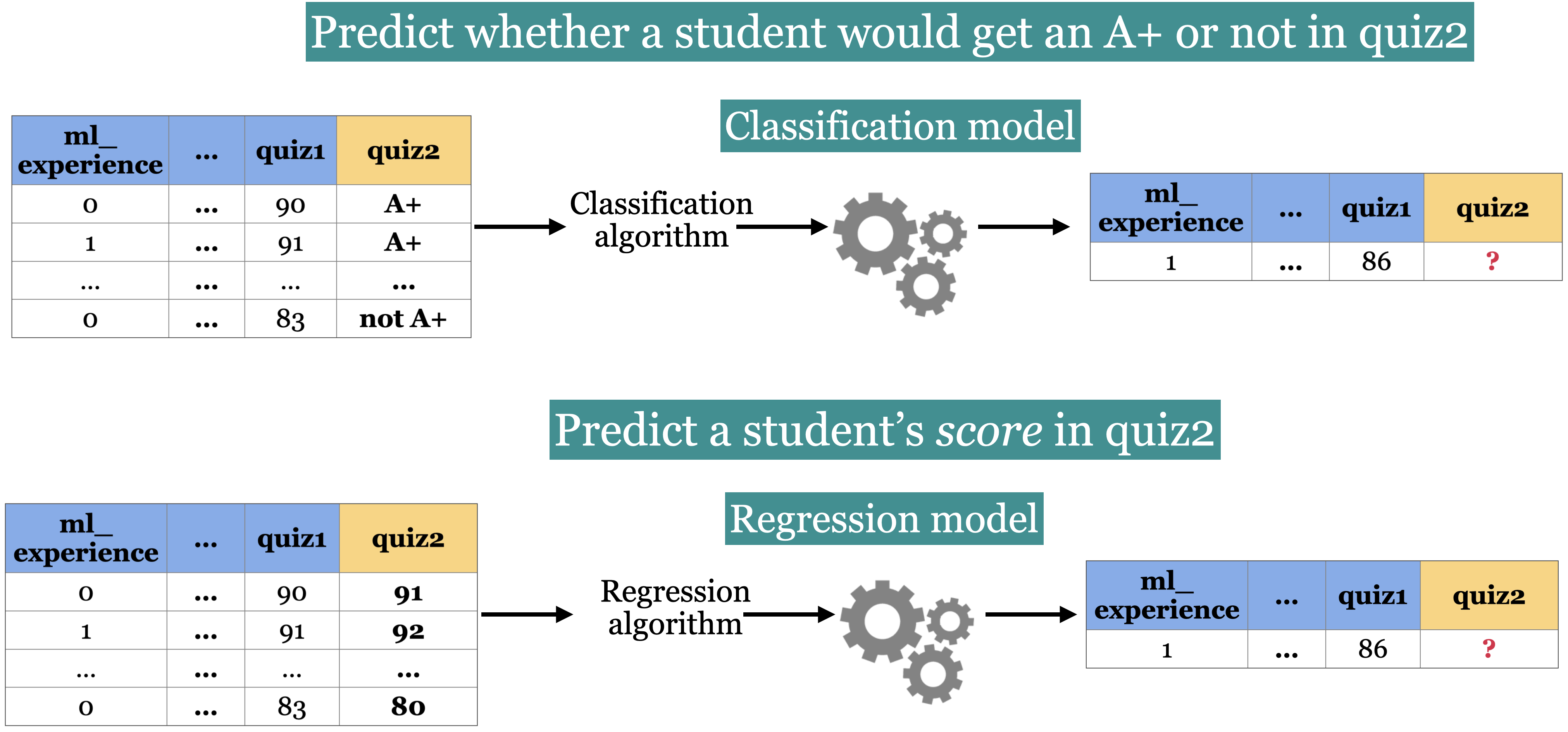

Classification vs. Regression

Training

- In supervised ML, the goal is to learn a function that maps input features (\(X\)) to a target (\(y\)).

- The relationship between \(X\) and \(y\) is often complex, making it difficult to define mathematically.

- We use algorithms to approximate this complex relationship between \(X\) and \(y\).

- Training is the process of applying an algorithm to learn the best function (or model) that maps \(X\) to \(y\).

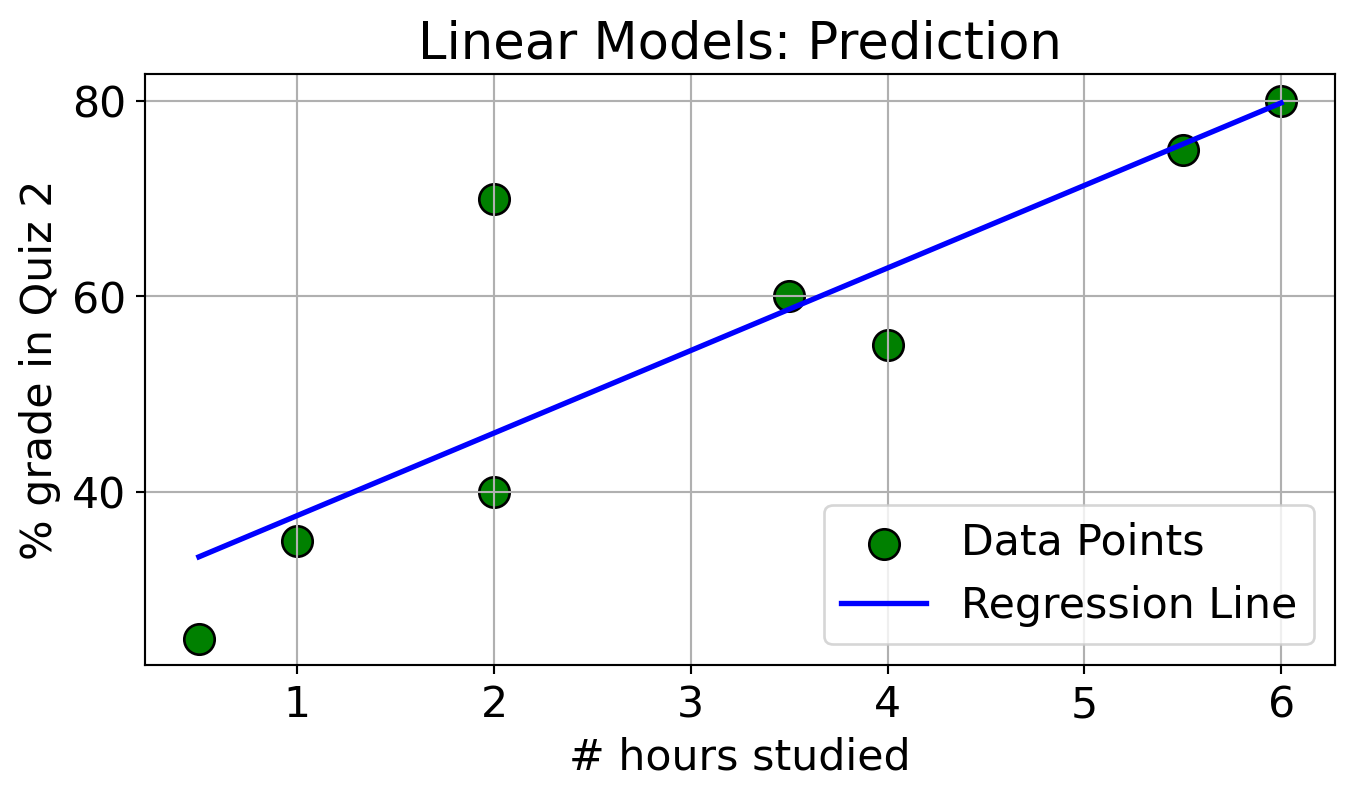

Linear models

- Linear models assume that the relationship between \(X\) and \(y\) is linear.

- In this case, with only one feature, our model is a straight line.

- What do we need to represent a line?

- Slope (\(w_1\)): Determines the angle of the line.

- Y-intercept (\(w_0\)): Where the line crosses the y-axis. This is also called the bias term.

- Making predictions

- \(\hat{y} = w_1 \times \text{\# hours studied} + w_0\)
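The prediction rule for a one-feature linear model can be sketched as follows. The weights `w1 = 5.0` and `w0 = 50.0` are made up for illustration; in practice they would be learned during training.

```python
def predict_grade(hours_studied: float, w1: float, w0: float) -> float:
    """Linear model with one feature: slope times the input plus the intercept."""
    return w1 * hours_studied + w0


# Hypothetical weights, not learned from any data in this deck.
w1 = 5.0   # slope: predicted grade increase per extra hour studied
w0 = 50.0  # y-intercept (bias): predicted grade at zero hours

predicted = predict_grade(4, w1, w0)
print(predicted)  # 70.0
```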

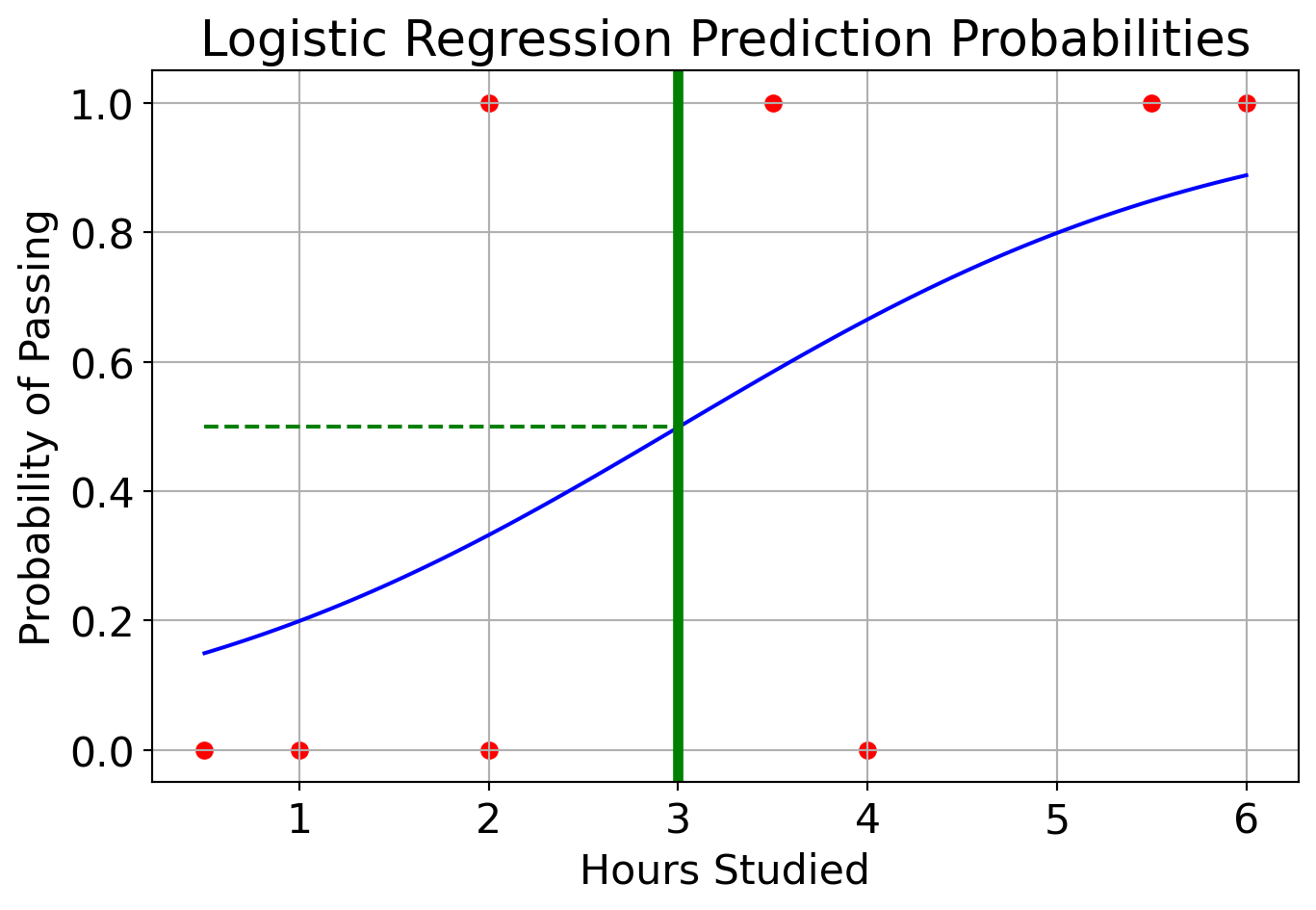

Logistic regression

- Suppose your target is binary: pass or fail.

- Logistic regression is used for such binary classification tasks.

- Logistic regression predicts a probability that the given example belongs to a particular class.

- It uses the sigmoid function to map any real-valued input to a value between 0 and 1, representing the probability of a specific outcome.

- A threshold (usually 0.5) is applied to the predicted probability to decide the final class label.

Logistic regression

- Calculate the weighted sum \(z = w_1 \times \text{\# hours studied} + w_0\)

- Apply the sigmoid function to get a number between 0 and 1.

- \(\hat{y} = \sigma(z) = \frac{1}{1 + e^{-z}}\)

- Model

- If you study \(\leq 3\) hours, you fail.

- If you study \(> 3\) hours, you pass.
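The two steps above (weighted sum, then sigmoid, then a 0.5 threshold) can be sketched like this. The weights `w1 = 1.0` and `w0 = -3.0` are hypothetical, chosen only so that \(z = 0\) at exactly 3 hours, which reproduces the pass/fail rule on this slide.

```python
import math


def sigmoid(z: float) -> float:
    """Map any real number to a value strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_pass_probability(hours_studied: float, w1: float, w0: float) -> float:
    z = w1 * hours_studied + w0  # weighted sum
    return sigmoid(z)            # predicted probability of passing


def predict_label(hours_studied: float, w1: float, w0: float, threshold: float = 0.5) -> str:
    probability = predict_pass_probability(hours_studied, w1, w0)
    return "pass" if probability > threshold else "fail"


# Hypothetical weights: z = 0 (probability 0.5) at exactly 3 hours.
w1, w0 = 1.0, -3.0

for hours in (2, 3, 4):
    print(hours, round(predict_pass_probability(hours, w1, w0), 3), predict_label(hours, w1, w0))
```

With these weights, 3 hours gives a probability of exactly 0.5, which does not exceed the threshold, so the label is "fail"; anything above 3 hours is labeled "pass".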

A graphical view of a linear model

- We have 4 features: x[0], x[1], x[2], x[3]

- The output is calculated as \(y = x[0]w[0] + x[1]w[1] + x[2]w[2] + x[3]w[3]\)

- For simplicity, we are ignoring the bias term.
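The weighted sum above is a dot product between the feature vector and the weight vector. A minimal sketch, with made-up values for `x` and `w`:

```python
def weighted_sum(x: list[float], w: list[float]) -> float:
    """Compute x[0]*w[0] + x[1]*w[1] + ... with no bias term."""
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    return sum(x_i * w_i for x_i, w_i in zip(x, w))


# Hypothetical feature values and weights for the 4-feature case.
x = [1.0, 2.0, 3.0, 4.0]
w = [0.5, -1.0, 0.25, 2.0]

y = weighted_sum(x, w)
print(y)  # 7.25
```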

Sentiment Analysis: An Example

- Let us attempt to use logistic regression to do sentiment analysis on a database of IMDB reviews. The dataset is available here.

| | review | label | review_pp |
|---|---|---|---|
| 47278 | First of all,there is a detective story:"légi... | positive | First of all,there is a detective story:"légi... |
| 19664 | this attempt at a "thriller" would have no sub... | negative | this attempt at a "thriller" would have no sub... |
| 22648 | What's the matter with you people? John Dahl? ... | positive | What's the matter with you people? John Dahl? ... |
| 33662 | This is another one of those films that I reme... | positive | This is another one of those films that I reme... |
| 31230 | I love Ben Kingsley and Tea Leoni. However, th... | negative | I love Ben Kingsley and Tea Leoni. However, th... |

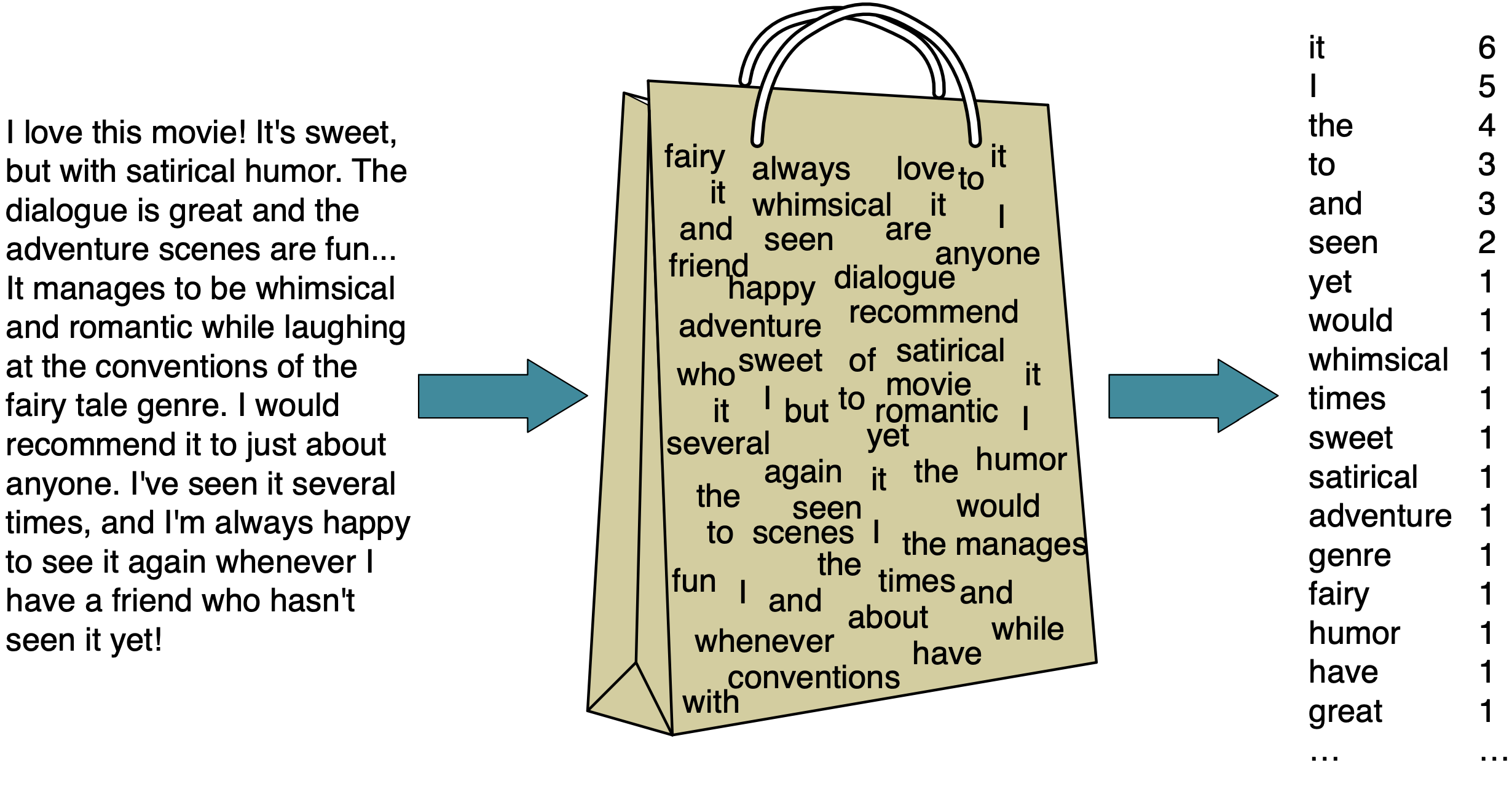

Bag of Words

- To create features that logistic regression can use, we will represent these reviews with a “bag of words” representation.
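A bag-of-words representation can be sketched with the standard library alone. The two toy reviews and the simple tokenizer (lowercase, then runs of letters and digits) are assumptions for illustration; the tokenization used for the actual IMDB features may differ.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into runs of letters and digits."""
    return re.findall(r"[a-z0-9]+", text.lower())


# Hypothetical toy reviews, not taken from the IMDB dataset.
docs = ["Great acting and a great plot", "Boring plot"]

# The vocabulary is the sorted set of all distinct words in the corpus.
vocabulary = sorted({token for doc in docs for token in tokenize(doc)})

# Each review becomes a vector of word counts, one position per vocabulary word.
bag_of_words = []
for doc in docs:
    counts = Counter(tokenize(doc))
    bag_of_words.append([counts[word] for word in vocabulary])

print(vocabulary)
for vector in bag_of_words:
    print(vector)
```

Word order is discarded: only how often each vocabulary word appears in a review is kept, and any word a review does not contain gets a count of zero.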

Bag of Words

- There are a total of 38867 “words” among the reviews.

- Most reviews contain only a small number of words.

| 00 | 000 | 007 | 0079 | 0080 | 0083 | 00pm | 00s | 01 | 0126 | ... | zurer | zuzz | zwart | zwick | zyada | zzzzip | zzzzz | â½ | â¾ | ã¼ber | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

5 rows × 38867 columns

Some words in the vocabulary

array(['00', 'affection', 'apprehensive', 'barbara', 'blore',
       'businessman', 'chatterjee', 'commanding', 'cramped', 'defining',
       'displaced', 'edie', 'evolving', 'fingertips', 'gaffers',
       'gravitas', 'heist', 'iliad', 'investment', 'kidnappee',
       'licentious', 'malã', 'mice', 'museum', 'obsessiveness',
       'parapsychologist', 'plasters', 'property', 'reclined',
       'ridiculous', 'sayid', 'shivers', 'sohail', 'stomaches', 'syrupy',
       'tolerance', 'unbidden', 'verneuil', 'wilcox'], dtype=object)

Investigating the model

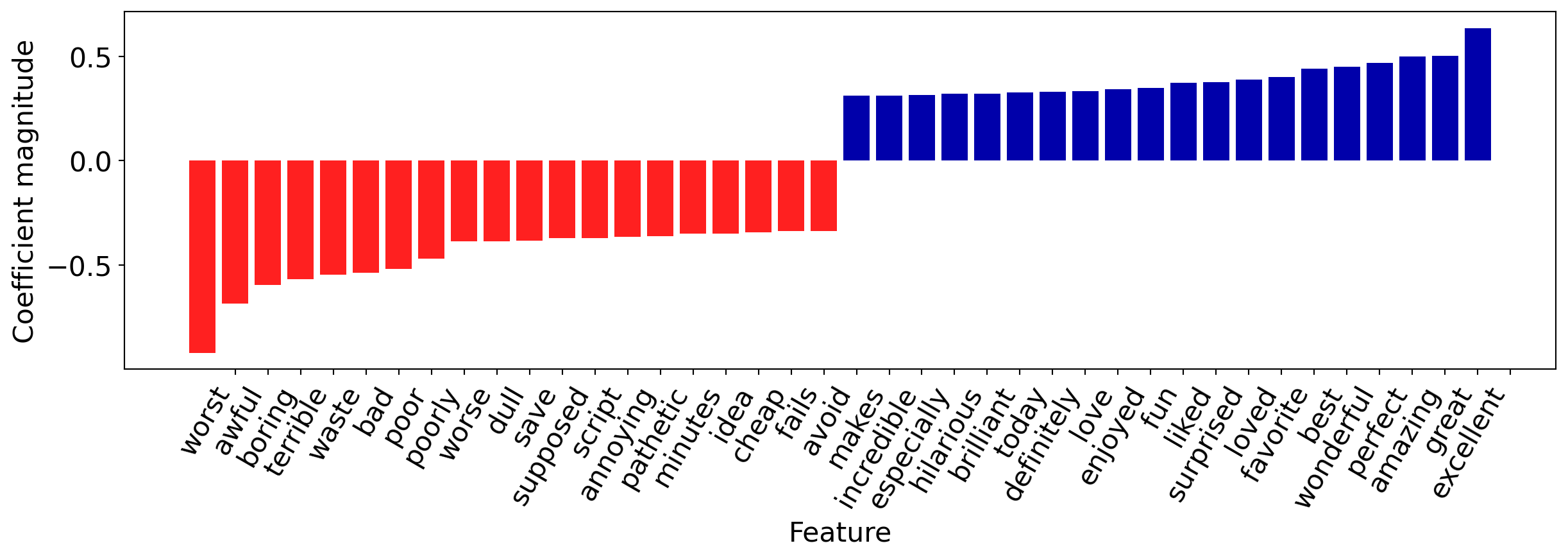

- Let’s see what associations our model learned.

| | Coefficient |
|---|---|
| excellent | 0.637051 |
| great | 0.501922 |
| amazing | 0.499925 |
| perfect | 0.470204 |
| wonderful | 0.450895 |
| ... | ... |
| waste | -0.545904 |
| terrible | -0.569702 |
| boring | -0.595568 |
| awful | -0.687145 |
| worst | -0.922031 |

32230 rows × 1 columns

- They make sense!
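To see how such coefficients combine, here is a rough sketch that sums the coefficients of the words in a review, weighted by how often each word occurs. It uses only the ten coefficients displayed in the table and ignores the intercept and all remaining coefficients, so it is not the model's actual output; the example sentence and the tokenizer are also assumptions.

```python
import re
from collections import Counter

# Only the ten coefficients shown in the table; the full model has many more.
shown_coefficients = {
    "excellent": 0.637051, "great": 0.501922, "amazing": 0.499925,
    "perfect": 0.470204, "wonderful": 0.450895,
    "waste": -0.545904, "terrible": -0.569702, "boring": -0.595568,
    "awful": -0.687145, "worst": -0.922031,
}


def partial_score(review: str) -> float:
    """Sum coefficient * word count over the words listed above (no intercept)."""
    counts = Counter(re.findall(r"[a-z0-9]+", review.lower()))
    return sum(coef * counts[word] for word, coef in shown_coefficients.items())


# Hypothetical sentence: 'excellent' pushes the score up, 'boring' pulls it down.
score = partial_score("Excellent acting but a boring plot")
print(round(score, 6))  # 0.041483
```

A positive partial score leans toward the positive class and a negative one toward the negative class, which matches the signs of the words in the table.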

Investigating the model

Let’s visualize the 20 most important features.

Making predictions

Finally, let’s try predicting on some new examples.

fake_reviews = [
    "It got a bit boring at times but the direction was excellent and the acting was flawless. Overall I enjoyed the movie and I highly recommend it!",
    "The plot was shallower than a kiddie pool in a drought, but hey, at least we now know emojis should stick to texting and avoid the big screen.",
]

- Here are the model predictions: